Spear phishing: how modern attacks really work

It's 9:12 a.m., and you open an email that looks routine. It references a real invoice, mentions a same-day cutoff, and asks for updated payment details. A few minutes later, a follow-up text arrives. Then a Teams message. Nothing feels off, yet all three messages are part of a single spear phishing attack.

Instead of broad, generic emails sent to thousands of people with an obviously malicious link, attackers now run tailored spear-phishing attacks aimed at specific individuals. They use OSINT and AI to study your company, mimic real communication patterns, and personalize messages that blend seamlessly into daily workflows.

In this article, we'll discuss how modern spear phishing scams actually work and how different roles should respond.

What is spear phishing?

Spear phishing is a personalized social engineering attack in which an attacker uses personal or organizational details, often gathered via open-source intelligence (OSINT), to craft a believable message that prompts a targeted person to take a specific action—usually to click, log in, pay, share data, or change access. Today, threat actors use AI to tailor messages (or follow-ups) to match real communication patterns.

Classic phishing is still the "send it to everyone and hope someone bites" play: a single generic message blasted to thousands of inboxes and optimized for volume (think "Your mailbox is full. Log in now.").

Spear phishing is more targeted. The attacker chooses one person (or a small group), researches them, and writes a message tailored to their role and current context, optimized for precision (e.g., "Hey—quick review needed before the board deck goes out").

And then there's whaling and business email compromise (BEC), closely related forms of spear phishing that target high-impact roles such as executives, finance, and procurement. The goal is typically to move money or gain high-value access through invoice fraud, payroll redirects, gift-card scams, or "we changed our bank details" vendor requests.

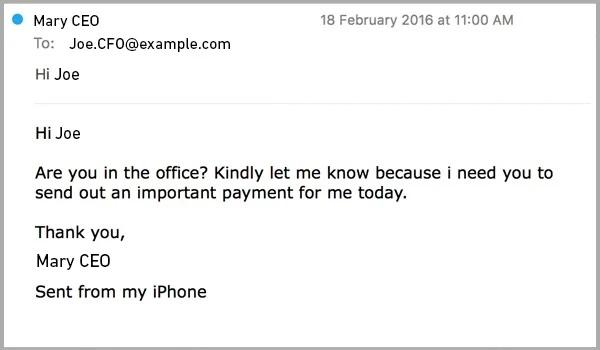

Here's what this looks like in real life. It's a classic CEO fraud spear phishing attempt—a short, urgent note aimed at a finance leader to trigger a payment before anyone slows down to verify.

Why personalization makes spear phishing harder to catch

Traditional email filters are effective at catching the obvious, including known-bad domains, suspicious attachments, and mass-sent spam patterns.

Spear phishing slips past because instead of an email that screams "scam," you get something like this:

Subject: Re: PO 18427 - updated banking details
Body: "Hey [your name], quick heads up: we moved to a new account this month. Can you send today's payment to the updated bank info in the attached PDF? We'll be charged a late fee if it misses the cutoff."

Nothing appears sketchy or unusual. It matches a real workflow, including a vendor thread, a plausible reason, a common attachment type, and time pressure, so the risky action (changing payment details) feels routine.

How spear phishing attacks actually work today

Spear phishing usually doesn't arrive with neon-red warning signs. It shows up as a message that fits your day. It could be a document you "need to review," a vendor note that seems routine, or a quick request from someone who already seems to be in the loop.

Here's what's actually happening inside modern attacks, so you can train people to recognize and avoid them.

OSINT-driven reconnaissance and target profiling

Attackers start by using OSINT to collect context about your company and your people, so their messages land convincingly.

If you've ever done the following, you've dipped into OSINT:

- Googled a company before an interview

- Checked someone's LinkedIn before a call

- Looked up a vendor review before buying

Attackers do the same thing, just with worse intentions and more persistence. For spear phishing, OSINT covers anything an attacker can access without breaking into your systems.

Here are some common sources cybercriminals pull details from:

- LinkedIn and team pages: role, manager, org structure, recent promotions, travel/conference attendance

- Company press releases/news: acquisitions, new vendors, office moves, layoffs, leadership changes

- Job listings: tools you use (Okta, Workday, Salesforce, Jira, AWS, etc.), which help them choose believable lures

- Social media: birthdays, kids' names, "OOO in Boston this week," photos that reveal office layouts, badges, or vendor logos

- Breached data and illicit markets: old passwords, phone numbers, or security-question answers (not strictly OSINT, but it gets blended into the profile)

Once they've gathered OSINT, attackers target you by role, replicating the exact type of request you routinely handle. Your brain labels it as normal, and you act fast.

For finance, that's an "invoice/bank details update before cutoff." For engineering, it usually shows up as a "security issue" that needs a quick fix, because handling those is a normal part of the job.

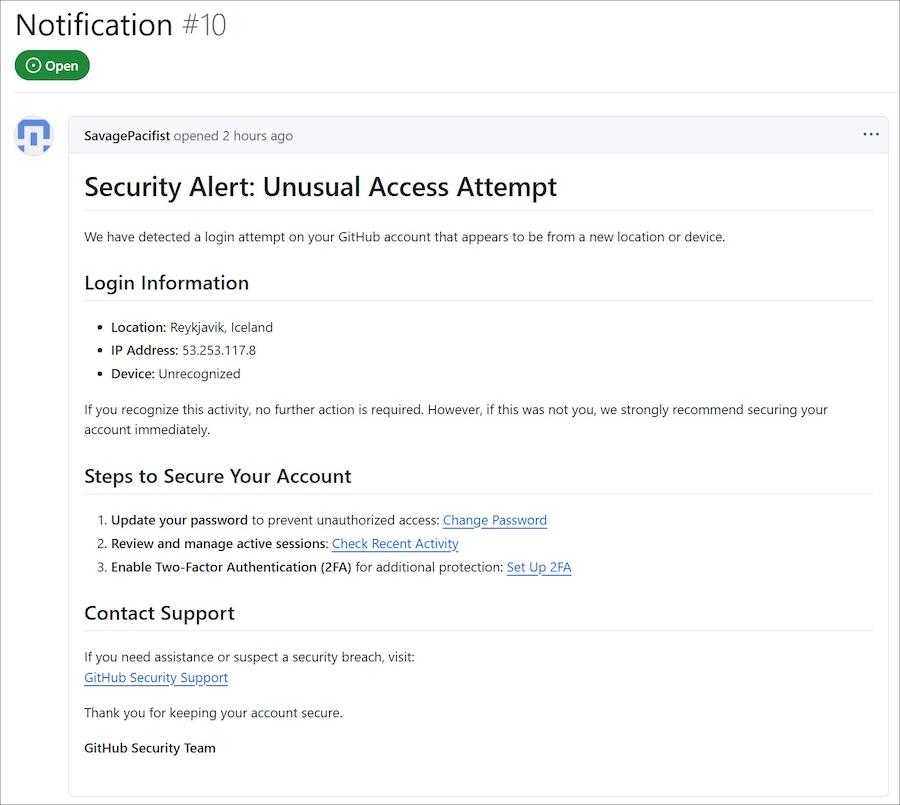

For example, in a 2025 phishing attempt, attackers posted fake "Security Alert" messages across about 12,000 GitHub projects, trying to trick developers into clicking through and granting access to a malicious app. The scam wasn't "pay this invoice." It was "handle this security task," because that's the kind of request engineers act on fast.

The same lure wouldn't work across roles, because it wouldn't match what each person typically does.

Crafting a deceptive message

Once an attacker has a target profile, they craft a message designed to feel normal. Not dramatic or obviously scammy, just normal enough that you act on autopilot.

Here's what that looks like in practice:

- Tone matching: They write the way your coworkers write. If your VP always sends two-line pings, you'll get: "Quick favor, need eyes on this." If Legal writes formally, you'll get: "Per our discussion, please review the attached addendum."

- Timing patterns: They hit you when you're least likely to slow down, often minutes before a meeting, or right after a travel day. The goal is to catch you in autopilot mode.

- Internal context: They use details that make you think, "Oh, this is legit." For example, a real vendor name ("DocuSign," "Workday," "Okta"), a real project ("Q1 audit," "SOC2 evidence," "new SSO rollout"), or a real org event ("reorg changes," "new hire starting Monday").

- Low-friction asks: They don't start with "send me secrets." They start with something small: "review this doc," "approve this," "re-auth," "use this code." Each step looks minor, but it can lead to a stolen login, a fraudulent approval, or a stolen MFA code.

AI makes this easier: it quickly produces many variations, adapts language to the recipient's role, and removes the sloppy grammar that used to be a giveaway. In a controlled study of personalized phishing, AI-generated emails performed about as well as those written by human experts (both achieved roughly a 54% click-through rate in that experiment).

Multi-channel delivery techniques

Modern spear phishing often unfolds as a conversation that hops channels: from email to SMS (smishing), phone calls (vishing), and voicemail, sometimes with a synthetic voice or video.

You get an email about an invoice or a security issue, then a text asking if you saw it, and maybe a quick Teams message or phone call saying it's urgent. Each switch adds social proof, so it feels real: "They emailed and texted and called."

Real attack patterns security teams should recognize

These are the patterns that recur in real-world incidents. In each case, the message feels routine and urgent, and questioning it is slightly uncomfortable, prompting people to move forward rather than slow down.

Executive impersonation request (financial urgency)

A message appears to come from the CEO or CFO asking for a "quick favor" before a deadline. It could involve approving a wire transfer, purchasing gift cards, or reviewing an urgent payment. The warning sign isn't the sender's email address. It's the push to skip normal approval steps and treat the request as a small, urgent favor that needs to happen right now.

Access credential harvest for internal systems

An employee receives a believable alert about SSO, email, VPN, or a tool they use daily, telling them to "re-authenticate" or "fix an issue." The giveaway is the push to log in immediately through the link in the message, rather than opening the app directly or checking with IT.

If you hesitate, they follow up with reminders or warnings to increase pressure and rush you into acting before you stop and verify.

Targeting new hires with onboarding-themed messages

New employees get emails about benefits enrollment, policy acknowledgments, payroll setup, or training portals during their first days. The attacker relies on confusion and eagerness to do things right, knowing new hires are less confident about what is normal and who to ask.

Vendor impersonation + invoice fraud

Someone posing as a known vendor requests updated banking details or sends a revised invoice, often referencing a real project or past payment. The key behavior signal is a change request tied to money, especially when it discourages verification or claims the change is "already approved."

People don't fall for spear phishing because a message looks polished. They fall for it because it seems like a normal request that moves from email to text to Teams, followed by a quick call that adds pressure. By the time the last message arrives, responding feels easier than stopping to verify.

To avoid this, security teams should train employees to pause when a request creates urgency, skips a normal step, or asks for money or access, because these patterns recur in real attacks.

Modern security awareness training platforms like Adaptive Security run multi-channel phishing simulations across email, SMS, and voice/video, including OSINT-based scenarios and executive impersonation, so employees rehearse the exact moments that call for caution instead of reacting on autopilot.

How AI and deepfakes are changing spear phishing

Attackers now impersonate people you work with and write as if they understand your job. Instead of guessing what might trick someone, AI lets them personalize messages and adjust them if the first attempt fails. That shift is what makes today's attacks harder to spot and easier to fall for.

AI-powered personalization at scale

AI enables attackers to personalize messages by automating time-consuming steps. Instead of manually researching a single employee, they can feed a generative AI tool like ChatGPT a LinkedIn profile, a job title, recent company news, and a few public posts, and receive a role-appropriate message in seconds.

The model adjusts tone (formal vs casual), references the right tools ("Okta," "GitHub," "Workday"), and frames the request around what that person actually does at work.

Synthetic voice and video impersonation

Synthetic voice and video deepfakes remove one of the last instincts people trust: "I heard their voice, so it must be them." Attackers can now clone a voice using short audio clips pulled from earnings calls, webinars, podcasts, or social media videos, and then use that clone in a phone call or voice note.

Video deepfakes work the same way, using publicly available footage to mimic an executive's appearance and voice on a call.

In early 2025, attackers cloned the voice of Italy's Defense Minister Guido Crosetto and used that synthetic voice to call business leaders, claiming an urgent ransom situation that resulted in at least €1 million being transferred before police intervened. This shows how convincing voice cloning can be, even without video.

Why legacy training fails here

Most traditional training teaches people to spot "phishy-looking" emails using static template examples. That falls apart when the message is clean, role-specific, and backed up by a call or voice note that sounds real.

You don't need better "spot the typo" skills. You need to build the habit of slowing down when someone tries to rush you.

Here's what to train instead:

- Treat voice/video as a request channel, not proof: Verify high-risk requests the same way you would verify an email.

- Make one rule memorable: If the request involves money, credentials, MFA, or access changes, you verify out of band. Call back using a saved number, confirm in a ticketing system, or use a known internal approval flow.

- Practice realistic scenarios: Leverage new-gen platforms like Adaptive Security to deliver AI-driven, OSINT-based simulations across email, SMS, and voice so employees rehearse the exact moments they need to stay alert.
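To make the "verify out of band" rule concrete, here is a minimal Python sketch of how a team might encode it in an internal triage helper. Everything in it is hypothetical: the `Request` fields, the action labels in `HIGH_RISK_ACTIONS`, and the `saved_contacts` directory are illustrative assumptions, not part of any product or standard. The key design choice is that channel and urgency are ignored, so a convincing voice call never exempts a request from verification.

```python
from dataclasses import dataclass, field

# Hypothetical action labels for the "money, credentials, MFA, or access" rule.
HIGH_RISK_ACTIONS = {"payment", "bank_change", "credentials", "mfa", "access_change"}


@dataclass
class Request:
    channel: str          # e.g. "email", "sms", "chat", "voice", "video"
    claimed_sender: str   # who the message claims to be from
    actions: set = field(default_factory=set)  # what the request asks you to do
    urgent: bool = False


def needs_out_of_band_check(req: Request) -> bool:
    """True if the request touches money, credentials, MFA, or access.

    Channel and urgency are deliberately ignored: a familiar voice or an
    "urgent" tag never exempts a request from verification.
    """
    return bool(req.actions & HIGH_RISK_ACTIONS)


def verification_steps(req: Request, saved_contacts: dict) -> list:
    """List out-of-band steps; contact details inside the request are never used."""
    if not needs_out_of_band_check(req):
        return []
    steps = []
    number = saved_contacts.get(req.claimed_sender)
    if number:
        steps.append(f"Call back {req.claimed_sender} at saved number {number}")
    else:
        steps.append(f"Find {req.claimed_sender} in the internal directory before replying")
    steps.append("Confirm in the ticketing system or known approval flow")
    return steps


# Usage: an urgent "CFO" voice note asking for a wire transfer.
req = Request(channel="voice", claimed_sender="CFO", actions={"payment"}, urgent=True)
for step in verification_steps(req, {"CFO": "+1-555-0100"}):
    print(step)
```

The call-back number comes from a directory you already trust, never from the message itself, which is what makes the check "out of band."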

Role-specific spear phishing defense strategies

Attackers don't send the same message to everyone. They decide who can do what within the company, then design the message around those roles. Finance receives payment requests, IT receives access and reset requests, HR receives onboarding paperwork, and executives receive "urgent" approvals.

Here's how to tackle role-specific spear phishing attempts.

For security awareness managers

As a security awareness manager, your job isn't just to teach employees to spot phishing emails. Because modern spear-phishing messages look so real, you also need to teach them when to stop and verify. This matters most when a message asks for money, credentials, or access changes, or pushes people to skip a normal process under time pressure.

Here's what to focus on:

- Train people to recognize behavioral triggers: Urgency, authority, requests to skip normal steps, and messages that move across channels.

- Use role-based scenarios: Finance should practice invoice and payment change requests. IT should practice MFA fatigue and access reset scams. New hires should practice onboarding and HR-themed lures.

- Include multi-channel scenarios: Real attacks rarely occur via email alone. Training that relies solely on email leaves people unprepared for texts, Teams messages, or calls. Use platforms like Adaptive Security to run realistic, OSINT-based simulations so employees can practice what real attacks actually feel like.

For IT and security operations

Attackers target IT teams because IT controls access to systems and confidential data. In many organizations, help desks handle password resets, MFA re-enrollment, device approvals, and account recovery.

Attackers exploit this by calling in, pretending to be an employee who "lost their phone," "can't log in before a meeting," or "just got a new device." These attacks succeed when teams rush, skip, or inconsistently apply identity checks such as call-back verification, ticket validation, manager approval, or secondary authentication.

To prevent attackers from exploiting these situations, IT and security teams should do the following:

- Enforce strict identity verification: Require call-back verification, ticket validation, manager approval, or secondary authentication for password resets, MFA changes, and new device approvals, even when requests sound urgent.

- Treat MFA fatigue as a warning sign: Multiple unexpected push requests usually indicate an active attack, not a confused user, and should trigger investigation rather than approval.

- Assume attackers know your tools: Job listings and public documents often reveal that you use Okta, Azure AD, Jira, or Workday, so an alert that mentions a familiar tool still needs verification.

- Make reporting fast and blame-free: Give people a simple way to report suspicious calls, prompts, or chats without fear of blame. Early reporting often prevents wider compromise.
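The MFA fatigue point can be sketched as a simple sliding-window rule. In this minimal Python example, the 10-minute window, the threshold of three prompts, and the `outcome` labels are assumptions chosen for illustration; real identity providers expose their own event formats and built-in protections, which should take precedence.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Illustrative assumptions, not vendor defaults.
WINDOW = timedelta(minutes=10)
THRESHOLD = 3
SUSPICIOUS_OUTCOMES = {"denied", "timeout"}  # prompts the user rejected or ignored


class MfaFatigueDetector:
    """Flags users who receive a burst of push prompts they did not approve."""

    def __init__(self, window: timedelta = WINDOW, threshold: int = THRESHOLD):
        self.window = window
        self.threshold = threshold
        # user -> timestamps of recent denied/ignored prompts (oldest first)
        self._recent = defaultdict(deque)

    def record(self, user: str, ts: datetime, outcome: str) -> bool:
        """Record one push prompt; return True if it should trigger an investigation.

        Assumes events arrive in chronological order per user.
        """
        if outcome not in SUSPICIOUS_OUTCOMES:
            return False
        recent = self._recent[user]
        recent.append(ts)
        # Drop prompts that have fallen outside the sliding window.
        while ts - recent[0] > self.window:
            recent.popleft()
        return len(recent) >= self.threshold


detector = MfaFatigueDetector()
start = datetime(2025, 1, 6, 9, 0)
for minutes in (0, 2, 4):
    flagged = detector.record("alice", start + timedelta(minutes=minutes), "denied")
print(flagged)  # True: three denied prompts within ten minutes
```

A flagged user should get a call from security on a known number, not an automatic approval or password reset.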

For people managers and executives

Attackers rely on your authority to pressure others. Messages that appear to come from leaders are often used to push employees to act fast, approve payments, or skip verification. With voice and video impersonation, that pressure can feel even more convincing.

- Set clear expectations for verification: State explicitly and repeatedly that you expect people to verify requests, even when they seem urgent or come from leadership.

- Model safe behavior: Avoid asking for sensitive actions over chat, text, or email. What you do becomes the standard that employees follow.

- Reinforce one simple rule: Any request involving money, credentials, or access changes must go through a known verification process, no exceptions.

- Run spear-phishing simulations involving executives: Use AI-based deepfake simulations that replicate modern attacker tactics so employees can experience these attacks firsthand in a safe environment. Tools like Adaptive Security can help here by running executive impersonation scenarios that mirror how real attackers operate today.

How to reduce organizational risk for spear phishing

Spear phishing works because people are busy, and attackers push them to act fast. To reduce risk, you need multiple safeguards, so people are not forced to rely on instinct or memory when something feels urgent.

Layer technical and behavioral controls

No single tool will stop spear phishing on its own. Email security can block obvious threats, but many targeted attacks still reach inboxes.

What actually helps is layering controls so one mistake doesn't turn into a breach:

- Strong identity verification for password resets, MFA changes, and device enrollment slows down attackers who rely on urgency.

- Conditional access and MFA limit damage when credentials are stolen.

- Behavioral analytics flags things people miss, such as logins from new locations or impossible travel patterns.

Think of it like seatbelts and airbags. You don't expect drivers to never make mistakes, so you design systems that reduce damage when they do.
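Impossible travel is one of the easier behavioral signals to illustrate. The minimal Python sketch below compares two consecutive logins by great-circle (haversine) distance and flags them when the implied speed exceeds a threshold. The 900 km/h cutoff, the 100 km allowance for IP-geolocation jitter, and the `Login` record are assumptions for illustration; production tools also have to account for VPNs, proxies, and geolocation errors.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumption: roughly airliner cruising speed
MIN_DISTANCE_KM = 100.0    # assumption: ignore small IP-geolocation jitter


@dataclass
class Login:
    user: str
    when: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def is_impossible_travel(prev: Login, curr: Login) -> bool:
    """True if getting from prev to curr would require implausibly fast travel."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < MIN_DISTANCE_KM:
        return False
    hours = (curr.when - prev.when).total_seconds() / 3600
    if hours <= 0:
        return True  # far-apart logins at the same moment
    return distance / hours > MAX_PLAUSIBLE_KMH


boston = Login("alice", datetime(2025, 3, 3, 9, 0), 42.36, -71.06)
london = Login("alice", datetime(2025, 3, 3, 10, 0), 51.51, -0.13)
print(is_impossible_travel(boston, london))  # True: over 5,000 km in one hour
```

A flag like this is a prompt to investigate, not proof of compromise, which is why it works best layered with conditional access and MFA.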

Continuously evolve training

Static "how to spot a phishing email" training fails because attackers don't reuse the same tricks. Today's spear phishing looks like a real work scenario, with a realistic email, followed by a text, then a Teams message or a call. AI now helps attackers quickly adjust wording, tone, and timing.

Here's what works better instead:

- Train with realistic scenarios, not screenshots of "obvious phishing."

- Practice role-based attacks to help people understand what phishing looks like in their role.

- Include multi-channel simulations that reflect how attacks move across email, SMS, chat, and voice.

This is why many teams now use platforms like Adaptive Security, which models real attacker behavior, enabling employees to practice verification habits under realistic pressure.

Build psychological safety around reporting

Most spear-phishing damage happens after the first mistake, not because of it. People hesitate to report because they're unsure, embarrassed, or think, "Maybe it's nothing." Attackers rely on that delay.

Here's what actually reduces risk:

- Make reporting the default response. If someone receives a suspicious email, text, or call, reporting it should feel as natural as replying to a message, not like escalating an incident.

- Reward early reporting, even when it's a false alarm. You want employees to report suspicions, not just confirmed attacks.

- Treat reports as data, not mistakes. Each report tells you where people are getting targeted, which roles are under pressure, and where controls or training need adjustment.

This is difficult to do manually, so it's better to use a tool like Adaptive Security. When someone reports a suspicious email, text, or call, Adaptive updates risk scores to show where targeting is increasing. It then triggers the right follow-up training or simulations, so you can reduce risk early instead of discovering it after an incident grows.

Building resilience against targeted spear phishing attacks

Spear phishing succeeds because it exploits human behavior, not just technical gaps. Attackers create urgency, impersonate trusted people, and push employees to act before they verify.

To build resilience against such sophisticated tactics, teams need to practice against realistic simulated attacks, including deepfakes, delivered through modern security awareness tools. When people regularly train on scenarios that mirror real attacks, they learn to identify these scams and report them without hesitation.

If you want to reduce spear-phishing risk measurably, start by testing how your organization reacts to real-world, multi-channel phishing attempts.

Attackers don't limit themselves to email, and neither should your simulations. Book a free demo to experience Adaptive's voice, SMS, and deepfake-enabled spear phishing scenarios for yourself.

FAQs about spear phishing

How do attackers gather information for spear phishing?

Attackers start with publicly available information. They check LinkedIn, company websites, press releases, and social posts to collect personal data and job details. They also reuse information from past data breaches, like old login credentials or email formats. This helps spear phishers create personalized emails that look like they come from a trusted source.

What are the most common spear phishing techniques?

The most common techniques rely on social engineering tactics like pretexting: attackers invent a believable work scenario, such as an urgent invoice issue or a security alert, and pair it with a strong sense of urgency.

You'll see spoofed email messages asking for payments, access resets, or document reviews. Some campaigns use text messages (smishing) or calls (vishing) to follow up. Others lead to a fake website or include malicious attachments that install malware or ransomware.

How does AI make spear phishing more convincing?

AI helps hackers scale research and write realistic messages quickly. They use it to mimic tone, reference real tools in your stack, and send messages that sound routine, such as "We noticed a Microsoft sign-in issue, please reset your password to avoid account suspension."

AI also helps attackers test which messages get past spam filters and identify known vulnerabilities to target. Combined with voice cloning, this fuels CEO fraud and other high-profile attacks. To counter this, you need layered cybersecurity controls, multi-factor authentication, and regular employee training that reflects modern spear-phishing campaigns.

As experts in cybersecurity insights and AI threat analysis, the Adaptive Security Team is sharing its expertise with organizations.
