When your board asks if a deepfake could trick your company, they’re not asking about technology. Rather, they’re asking if employees are ready.

The uncomfortable truth is that most aren’t. Studies show that only 0.1% of people can accurately spot a deepfake even when they’ve been primed to look for one. That means nearly everyone is vulnerable, and cybercriminals know it.

In this article, you’ll learn why deepfakes are a growing security threat, how awareness training works in practice, what makes it effective, and how to roll it out with measurable results.

Why deepfakes are a growing security threat

Deepfakes made up 6.5% of all fraud attempts in 2023—a 2,000% increase over the past few years—and are quickly becoming one of the most dangerous tools in a cybercriminal’s playbook.

What started as simple video face-swapping has evolved into weaponized impersonations, AI-cloned voices, and full-scale disinformation campaigns. For businesses, these new attacks are already draining millions in fraud, reputation damage, and regulatory exposure.

Executive impersonation and wire fraud

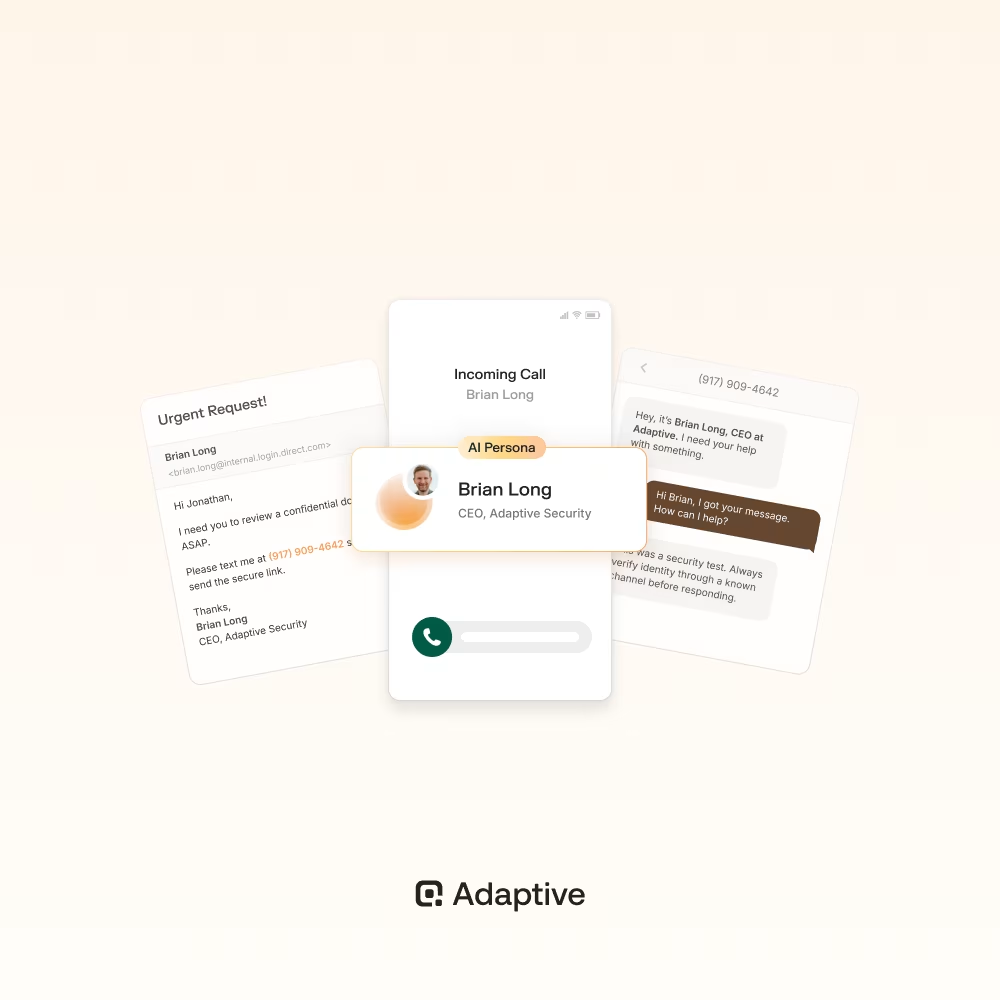

Deepfake phishing takes old-school wire fraud to a new level. Instead of just sending fake emails, fraudsters are now using generative AI to create hyper-realistic video calls or audio recordings of executives authorizing transfers. As with other types of phishing attacks, these schemes rely on exploiting trust and urgency.

Real-world consequences are already staggering. Global engineering firm Arup lost $25 million after an employee was duped during a video call with a deepfake version of their CFO. Research suggests the average cost of a deepfake fraud incident now exceeds $450,000, making it one of the costliest attack vectors organizations face today.

Voice cloning and vishing scams

Vishing (voice phishing) involves attackers using phone calls to trick people into giving up sensitive information or authorizing actions. These scams traditionally relied on generic scripts or poor-quality recordings. However, AI voice cloning has reached the point where 70% of people can’t tell the difference between a real voice and a cloned one.

Scammers exploit this with vishing calls that mimic colleagues, vendors, or even family members. A cloned CFO or HR manager can easily pressure employees into sharing credentials or greenlighting payments within minutes.

AI-augmented social engineering

Social engineering gets supercharged when paired with AI. Instead of generic phishing emails, attackers can now send fake onboarding videos from HR or WhatsApp voice notes from vendors.

This might look like a new hire receiving a deepfaked welcome video that walks them through “logging in” on a fake portal and then captures their credentials. An accounts payable clerk could also get a WhatsApp audio message, perfectly mimicking a supplier’s voice, asking them to urgently update payment details.

At a larger scale, attackers have created deepfake clips of executives making false announcements. In 2024, India’s National and Bombay Stock Exchanges had to warn investors after deepfakes of their CEOs circulated online, giving fake stock tips. This shows how easily cybercriminals can now manipulate market trust and corporate reputation.

What is deepfake awareness training?

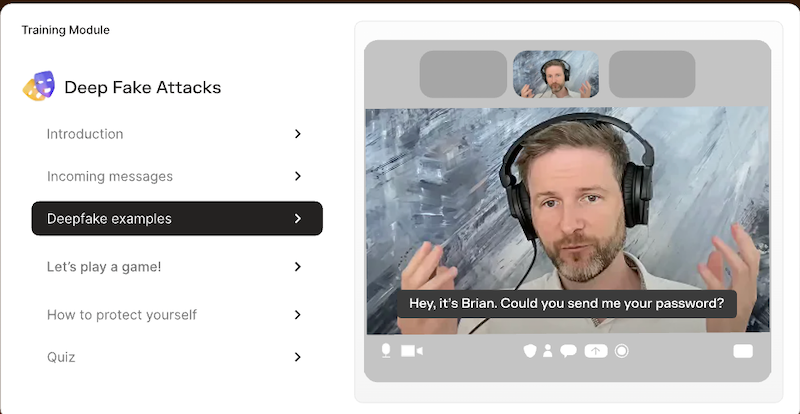

Deepfake awareness training prepares employees to spot and respond to media manipulation. This could be a fake voicemail from the CFO, a Zoom call with HR, or a WhatsApp message from a vendor. The goal isn’t to turn staff into forensic analysts, but to make them pause, question, and verify before they act.

Here’s what deepfake awareness training looks like in action:

- Beyond traditional phishing: Instead of just practicing on email simulations, employees are exposed to fake Zoom calls, voicemail scams, or WhatsApp voice notes. These AI phishing simulations mirror how attackers actually operate today. For example, finance teams might get a fake call from a CFO authorizing a wire transfer.

- Recognizing synthetic media: Staff can see side-by-side comparisons of real vs. fake clips to build their detection skills. They learn to look for subtle giveaways, such as slightly off lip sync, flat vocal tone, or lighting that doesn’t match.

- Aligning with real risks: Training is tailored to high-risk roles to help employees spot and stop the scams they’re most likely to face. Finance staff might get a simulated deepfake video call from a CFO requesting an urgent transfer, while executive assistants may get a fake voicemail from their manager requesting sensitive files.

Ultimately, deepfake awareness training is only effective if it stays current. Attackers evolve fast, so defenses must too.

New-age security awareness platforms like Adaptive Security help by delivering continuous, role-specific training that mirrors the kinds of scams people are actually facing rather than drowning employees in generic modules.

Instead of a once-a-year phishing drill, Adaptive Security runs realistic simulations in the background—like a deepfake voicemail to finance or a fake HR video to new hires—giving them hands-on practice in safe conditions.

Core components of effective deepfake training

A good deepfake awareness program isn’t just a one-off quiz or an email reminder. To actually change behavior, it needs to feel real, adapt over time, and demonstrate measurable results.

Here are the three pillars that make training effective:

Multimedia simulations (voice and video)

Email phishing tests alone won’t cut it anymore. Employees need to practice against the mediums attackers now use: fake Zoom calls, cloned voicemails, and even WhatsApp videos.

Realistic simulations create “muscle memory” so that when a cloned CFO calls late on a Friday, employees already know to slow down and verify.

One training method gaining traction is the CEO Deepfake Demo: a controlled exercise in which staff experience what it feels like to be targeted by a hyper-realistic fake of their own executive. For example, Adaptive Security generates these demos in a secure environment using short reference samples of a leader’s speech or video. The goal isn’t to “catch” people, but to safely show how convincing an attack can sound or look. That way, the shock happens during training rather than during a real fraud attempt.

Adaptive retraining

Attackers evolve fast. Just a decade ago, it was “Nigerian prince” emails, low-effort scams asking individuals to send small payments in exchange for a promised payout later. Now, it’s deepfakes convincing enough to dupe global firms into wiring millions. Technology is advancing at a rapid pace, and if training doesn’t keep up, employees will get left behind.

For that reason, a once-a-year module won’t stick. Hermann Ebbinghaus’s Forgetting Curve shows that people can lose 50–90% of new information within a week without reinforcement. If employees only see deepfake awareness content once a year, most of what they learned will be forgotten by the time they face an actual attack.

The fix is continuous, adaptive retraining that includes retesting employees who slip up, delivering quick refreshers in the flow of work, and gradually increasing the difficulty. Research from the University of Iowa confirms that spreading learning out over time dramatically improves retention compared to cramming it all at once.
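As a rough illustration of that loop, here is a minimal Python sketch of a retraining scheduler. Everything in it is an assumption made for the example: the `TrainingState` fields, the 7-day and 90-day bounds, and the doubling rule are hypothetical and do not describe how any particular platform schedules its simulations.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical policy values, chosen only for illustration.
MIN_INTERVAL_DAYS = 7
MAX_INTERVAL_DAYS = 90


@dataclass
class TrainingState:
    interval_days: int = MIN_INTERVAL_DAYS  # gap before the next simulation
    difficulty: int = 1                     # 1 = easiest scenario tier


def next_state(state: TrainingState, passed: bool) -> TrainingState:
    """Return the updated schedule after one simulation result."""
    if passed:
        # Passed: space the next exercise further out and make it harder.
        return TrainingState(
            interval_days=min(state.interval_days * 2, MAX_INTERVAL_DAYS),
            difficulty=state.difficulty + 1,
        )
    # Slipped up: retest soon, at the same difficulty.
    return TrainingState(
        interval_days=MIN_INTERVAL_DAYS,
        difficulty=state.difficulty,
    )


def next_due(last_run: date, state: TrainingState) -> date:
    """Date on which the next simulation should be delivered."""
    return last_run + timedelta(days=state.interval_days)
```

An employee who passes moves from a 7-day to a 14-day gap and a harder scenario; one who fails drops back to a 7-day retest without the difficulty increasing.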

Adaptive Security takes this approach by integrating training into everyday workflows through the quiet execution of realistic simulations and role-specific refreshers. The result isn’t overload but steady awareness that sticks, ensuring employees are ready when a real attack happens.

Dashboards to measure behavior change

If you can’t measure it, you can’t improve it. Dashboards that track click-through rates, simulation responses, and escalation actions give security teams clear visibility into progress.

Measurable results show who spotted a fake Zoom call, who clicked on a cloned voicemail, and where the weak spots are. For example, if finance staff are still failing voice deepfake tests 40% of the time, that’s a signal to focus retraining there.

Proofpoint reports that organizations using behavior-driven training see about 40% fewer clicks on real email threats within six months when they track user behavior and adapt training accordingly. The payoff is progress visibility, targeted reinforcement, and a workforce that steadily becomes harder to fool.
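To make the dashboard idea concrete, the sketch below computes a per-department failure rate from simulation results and flags teams at or above a retraining threshold. The record shape, the outcome labels, and the 40% cut-off (borrowed from the finance example above) are assumptions for illustration, not a vendor data format.

```python
from collections import defaultdict

# Assumed cut-off, mirroring the 40% example in the text.
RETRAIN_THRESHOLD = 0.40


def failure_rates(results):
    """Map each department to its share of failed simulations.

    `results` is an iterable of (department, outcome) pairs, where the
    hypothetical outcome labels are "reported", "ignored", or "failed".
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for department, outcome in results:
        totals[department] += 1
        if outcome == "failed":
            failures[department] += 1
    return {dept: failures[dept] / totals[dept] for dept in totals}


def needs_retraining(results, threshold=RETRAIN_THRESHOLD):
    """Departments whose failure rate is at or above the threshold."""
    rates = failure_rates(results)
    return sorted(dept for dept, rate in rates.items() if rate >= threshold)
```

Counting "reported" separately from "ignored" also lets the same data show escalation behavior, not just failures.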

Implementation roadmap

Rolling out deepfake awareness training shouldn’t overwhelm your organization. The key is to start small, prove value, and then scale with measurable results. Here’s a step-by-step path to implement deepfake awareness training:

Start with high-risk departments

Decide where to launch deepfake awareness training by pinpointing the teams most likely to be targeted. High-risk areas aren’t always obvious. They often depend on who controls money, who is most visible externally, and who interacts the most over video or voice channels.

Here’s a simple framework to map those high-risk departments in your company:

- Map roles and workflows first: List which departments handle money, approvals, vendor payments, external communications, or sensitive data. Finance, HR, executive assistants, and procurement usually come up high.

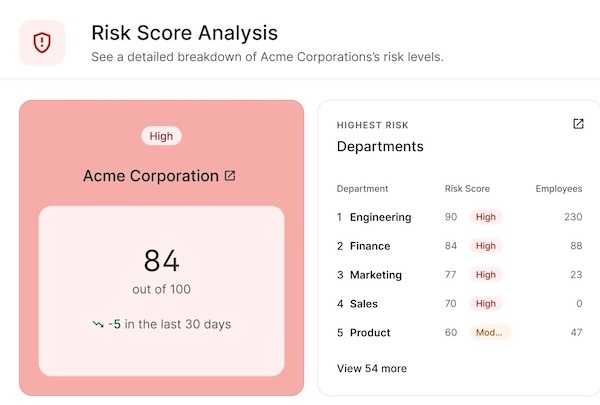

- Use Adaptive Security’s risk scoring: Adaptive Security assesses risk based on role, exposure, and past behavior (e.g., how prone people are to phishing or impersonation attempts). You can then assign risk scores to departments.

- Review incident history: Identify which teams have had suspicious payment requests, vendor fraud, or impersonation attempts in the past. Historical weak points are often future weak points.

- Consider external visibility: If someone in a team is publicly visible (C-suite, legal, PR), there’s likely more data about them available to attackers for building deepfakes. That's a risk factor.

- Assess technical exposure: Teams using Zoom, Teams, voice-based tools, vendor communications over WhatsApp, etc., are often more exposed.
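If you want a first pass before using a dedicated tool, the factors above can be combined into a simple weighted score. The sketch below is a hypothetical starting point: the factor names, the weights, and the 0 to 1 rating scale are all assumptions, and it is not Adaptive Security's scoring method.

```python
# Hypothetical weights; adjust them to your own risk priorities.
WEIGHTS = {
    "handles_payments": 0.30,      # money, approvals, vendor payments
    "past_incidents": 0.25,        # incident history
    "public_visibility": 0.20,     # external visibility
    "voice_video_exposure": 0.25,  # Zoom, Teams, WhatsApp, phone
}


def risk_score(factors):
    """Combine factor ratings (0.0 = none, 1.0 = high) into a 0-100 score."""
    score = sum(WEIGHTS[name] * factors.get(name, 0.0) for name in WEIGHTS)
    return round(score * 100, 1)


def rank_departments(ratings):
    """Department names ordered from highest to lowest risk score."""
    return sorted(ratings, key=lambda dept: risk_score(ratings[dept]), reverse=True)
```

Rating each department on every factor, even roughly, gives you a defensible order for choosing pilot teams.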

Once you’ve mapped high-risk areas, start small. Pick one or two of the riskiest teams (usually finance or HR) and run your first simulations for deepfake protection training there. Watch how employees respond, track what trips them up, and fix those gaps with retraining. Once you’ve worked out the kinks and demonstrated effectiveness, roll the training out to more teams.

Integrate into SAT programs

Most companies already run Security Awareness Training (SAT) programs, including phishing email tests, password hygiene lessons, or short compliance modules. These are the regular touchpoints where employees learn how to spot and handle cyber risks.

This step should come after you’ve piloted deepfake training with high-risk groups. Once you understand what works in finance, HR, or executive support, you can integrate those lessons into the broader SAT program, benefiting the entire company.

Here’s how to make that integration practical:

- Slot it into existing cycles: If you already conduct quarterly phishing tests, consider adding a deepfake scenario (such as a fake voicemail or video call) to the mix.

- Use familiar formats: Keep it short and consistent. Follow the same style as phishing drills or micro-modules so employees don’t feel like they’re learning an entirely new system.

- Connect it to known risks: Show employees that deepfakes are just a new twist on old scams and social engineering. A fake Zoom call from HR works the same way as a phishing email, trying to trick you into giving up information or clicking a malicious link.

- Keep it lightweight: Add a short exercise or simulation, not a two-hour training block. The goal is steady awareness, not burnout.

This approach integrates deepfake awareness into the existing security culture, rather than treating it as an isolated experiment.

Report measurable outcomes

Training only matters if you can show it’s working. After testing it with high-risk teams and integrating it into your regular SAT program, track the results to see if employees are getting better at spotting and reporting deepfakes.

Clear results also give leadership evidence that the deepfake training program is worth continuing and expanding.

Here’s how to make reporting useful:

- Measure behavior change: Track who spotted a fake Zoom call, who reported a cloned voicemail, and who still clicked on a simulation. These simple metrics show if training is sinking in.

- Track progress by department: Go back to the same risk scores you used at the start with Adaptive Security. If finance or HR showed up as high-risk in your initial mapping, check their scores again after training. A drop in risk score, such as fewer misses in simulations or quicker reporting, indicates that the training is actually working.

- Share numbers with leadership: Instead of just saying “employees are more aware,” report specific, measurable metrics to prove your training program’s ROI. For example, “Simulation click rates dropped from 35% to 10% in three months.” Concrete results build confidence and justify expanding the program.

The goal isn’t to prove perfection, but to show steady progress. This involves reducing the risks in currently vulnerable departments and helping employees make better decisions when faced with synthetic media.
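When you report a change like the 35% to 10% click-rate example above, it helps to state both the percentage-point drop and the relative reduction, since the two are easy to confuse. This small Python helper shows the arithmetic; the function name is made up for the example.

```python
def click_rate_change(before, after):
    """Return (percentage-point drop, relative reduction in %).

    `before` and `after` are click rates expressed as fractions,
    for example 0.35 for 35%.
    """
    if before <= 0:
        raise ValueError("before must be a positive click rate")
    point_drop = (before - after) * 100
    relative_drop = (before - after) / before * 100
    return round(point_drop, 1), round(relative_drop, 1)


print(click_rate_change(0.35, 0.10))  # (25.0, 71.4)
```

In other words, the same result can be reported as a 25-point drop or as roughly a 71% reduction in clicks. Say which one you mean.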

Why Adaptive stands out

It takes just one convincing deepfake call to cost your company millions. We’ve already seen examples of AI-generated videos tricking finance teams into wiring money, fake onboarding clips fooling HR, and stock exchanges scrambling to respond to CEO deepfakes.

Training that only covers phishing emails is no longer sufficient. Employees need practice against the attacks they’re actually facing. That’s where Adaptive Security comes in.

AI-powered deepfake simulations

Attackers now use deepfake video calls, cloned voices, and manipulated clips. These are the threats that phishing drills can’t prepare employees for.

Adaptive uses AI-powered simulations so employees can practice against the same media attackers now use. This builds the kind of muscle memory that generic phishing modules can’t.

Integration with phishing & SAT workflows

Most companies already run phishing drills and short SAT modules on passwords or data handling. Adaptive adds deepfake scenarios alongside them so employees can practice everything in one program.

For example, finance staff might get a phishing email and a simulated voicemail from the CFO in the same cycle. Or a new hire could receive a fake Zoom invite from HR.

Because Adaptive ties into tools like Slack, Zoom, and Outlook, these exercises show up in the same channels people already use. That way, phishing, password hygiene, and deepfake awareness become part of one routine, not three separate programs.

Human risk scoring for boards

Executives and boards don’t just want to know that employees “did their training.” They want proof that the program reduces risk. Adaptive translates employee performance into risk scores that show which departments are most exposed, how those scores shift over time, and where retraining is paying off.

Adaptive Reporting also provides consistent, audit-ready metrics across phishing simulation failure rates, training completions, and deepfake exercise results. Security leaders can filter by department or role, push data into platforms like Power BI or Tableau via API, and schedule automated reports to give boards regular “pulse checks” without waiting for quarterly reviews.

Deepfake technology is no longer a novelty. It’s an active tool for social engineering attacks and impersonation. For chief information security officers (CISOs), the job is to stop attacks while also proving to boards that the company is prepared for this new class of threat.

Discover how Adaptive reduces risk and strengthens your defenses against deepfake attacks. Book a demo today.

