Deepfakes aren’t a future problem; they’re happening every five minutes, costing organizations millions. And most companies are dangerously underprepared. In 2024, 43% of cybersecurity leaders reported at least one deepfake audio attack and 37% reported a deepfake video attack.

In Q1 of 2025, deepfake phishing attacks cost North American companies over $200 million in losses, and the number and severity of these attacks are only increasing. In 2024, a Hong Kong-based engineering firm lost $25.5 million to a successful deepfake of its chief financial officer.

Deepfakes are no longer just a tech novelty; they’re a real organizational threat that most teams aren’t ready for.

What are deepfakes and why do they matter now?

Deepfakes are hyper-realistic, AI-generated images, videos, or audio that depict people saying or doing things they didn’t. Attackers manipulate faces or clone voices to impersonate executives or trusted contacts with startling realism.

The term deepfake combines “deep,” from deep-learning artificial intelligence, and “fake,” referring to synthetic content. It emerged in 2017 when a Reddit user created “r/deepfakes” to share videos in which celebrities’ faces were digitally placed onto performers in adult content.

Deepfake technology has grown from a tool for hobbyists and harassment into a means of enterprise-grade manipulation. It’s now weaponized for impersonation, identity theft, and fraud.

The growth of generative AI has fueled the recent surge in deepfake videos and audio. The gen AI market is predicted to grow from $49.3 billion in 2024 to around $2.4 trillion by 2035, and deepfake technology will continue to evolve alongside it.

The deepfake threat landscape in 2025

Businesses face a complex threat environment. A deepfake attack occurs roughly every five minutes, and these attacks are often more successful than other phishing attacks.

Common exploits in the wild

Deepfakes take many different forms, and cyber criminals may use multiple methods to launch an attack, including:

- Face swapping: substituting one individual’s face with another’s in a video to create a convincing imitation

- Voice cloning: generating an artificial version of someone’s voice, allowing attackers to make it say anything in recordings or real time

- Lip-syncing: modifying an existing video so it looks like the person is speaking words they never actually said

- Full-body reenactment: altering body posture and movements to fabricate gestures and actions that never occurred

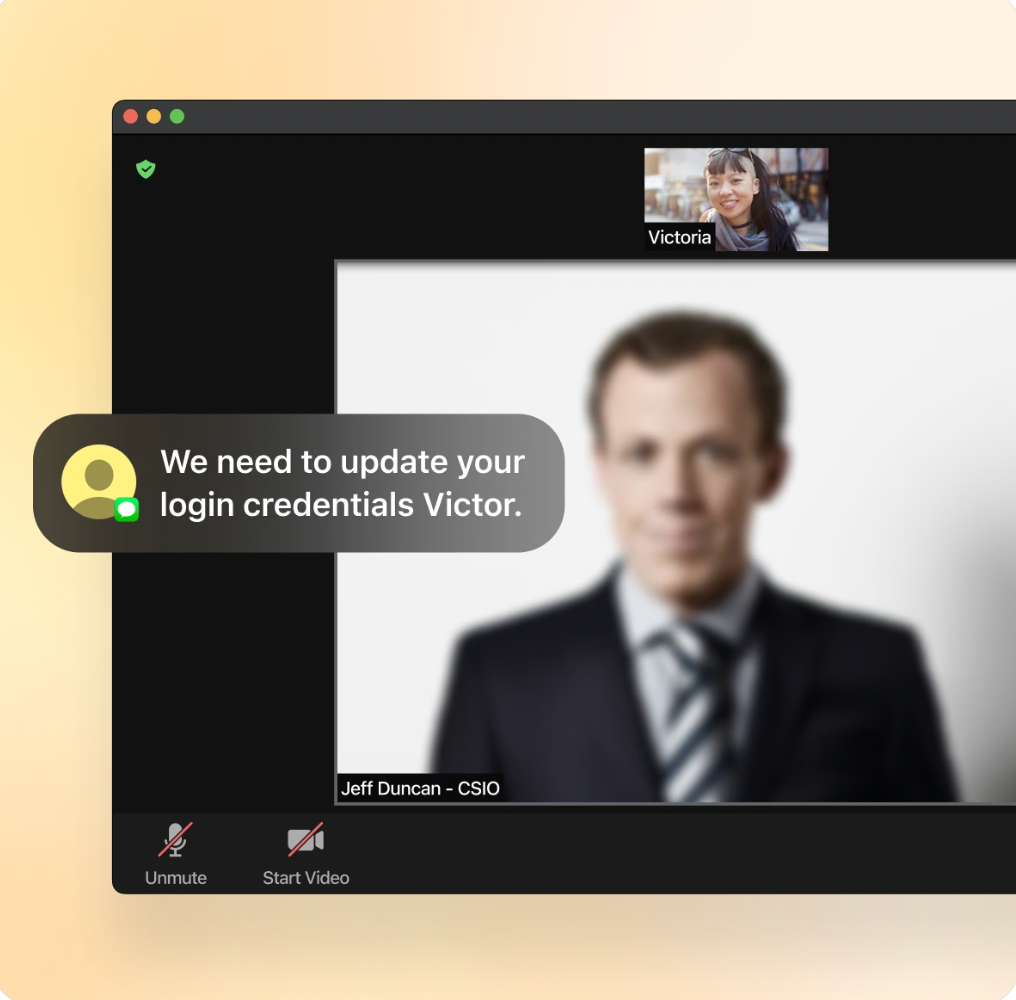

The content of these attacks ranges from AI voice scams (e.g., fake transfer approvals) to deepfake Zoom calls (e.g., executive impersonation) and HR or IT impersonation videos. Deepfakes typically target individuals inside or outside an organization who control or require financial access, confidential information, or both. The targeted individuals usually also hold a position of authority.

High-risk departments

Certain departments are especially vulnerable to deepfake-enabled social engineering due to their access to sensitive systems and authority over critical actions. These include:

- Finance: Finance departments are a prime target for invoice fraud and wire transfer scams. Deepfakes can realistically mimic executives and convince employees to authorize urgent or unusual payments.

- Human Resources: Attackers may impersonate new hires, executives, or vendors to submit fake onboarding requests or gain access to internal systems.

- Security and IT: Deepfakes can be used to deliver convincing fabricated security alerts or manipulate multi-factor authentication (MFA), potentially granting attackers system access.

Real cases to be aware of

Attacks can affect any industry at any time, from the travel industry, with the 2024 Amex GBT attack, to the ad space, which saw an attack on the head of the world’s biggest advertising group. Both attacks were highly sophisticated and used methods such as fake messaging accounts, voice cloning, and deepfake videos.

Why most organizations are vulnerable to deepfake threats

Traditional security awareness training can’t keep pace with rapidly evolving AI technology. Standard security awareness education is a show-and-tell; employees are simply shown examples of threats and given information. Modern threat preparedness requires behavioral training.

Employees inherently trust familiar and/or authoritative voices, faces, and video calls. Bad actors exploit this natural human behavior by creating a sense of urgency, leading to employee compliance. In these circumstances, it’s extremely difficult for any person to catch a fake.

Adaptive Security stands out from typical security awareness training (SAT) by using deepfake voice, video, and live simulation exercises to test and train employees against real attack scenarios. Legacy SAT tools fail to deliver this level of realism, leaving critical gaps in preparedness.

3 ways to strengthen your defense against deepfake threats

From fake videos of world leaders to celebrity impersonation to insurance fraud, deepfake misinformation is everywhere. No deepfake detection algorithm is 100% accurate, so employees must be prepared to act as the human firewall.

With business deepfake cases surging, the need for a thorough defense strategy is high. With the right training, real people in your organization can identify and properly report digital manipulation.

Train employees with realistic simulations

The most effective defense against deepfake-enabled social engineering is realistic, hands-on training. Employees need to experience convincing simulations that mirror the exact tactics attackers use across voice, video, email, and messaging channels.

You can replicate high-stakes, real-world threats using company- and department-specific deepfakes. This level of immersion encourages employees to pause, verify, and respond appropriately when facing sophisticated attacks.

Adaptive Security crafts hyper-personalized deepfake content based on the company's open source intelligence (OSINT) and uses the same machine learning techniques as hackers. The platform is trusted by leading organizations across various industries, from healthcare to finance to professional sports, because it works.

When training actually feels real, employees face the reality of social engineering, recognize subtle threat patterns, and respond in a security-compliant manner. An employee who fails to recognize a simulated fake image or video is an ideal candidate for follow-up deepfake protection education, which helps them prepare for next time.

Build multi-channel verification habits

Enhanced verification protocols are necessary to protect against the use of deepfakes. Require multi-channel verification for any sensitive requests, particularly those involving financial transactions or data access. Never trust a single medium like a video call or voicemail. Always confirm through a separate, secure, pre-established channel.

The mantra is “trust, then verify.” The employee may hop on a call with the CFO, but when the call includes a sensitive request, or when something feels “off” (such as a demand for financial information the CFO should already possess), the employee simply follows the required secondary confirmation path before acting.

Since anyone can be a victim of fake content, multi-channel verification should be required for all employees, not just those with heightened risk of attack.
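The verification rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of any particular product: the `Request` class, the `SENSITIVE_ACTIONS` set, the `TRUSTED_CHANNELS` directory, and the `may_proceed` function are all hypothetical names invented for this example. The idea is that a sensitive request proceeds only after it has been confirmed on a pre-established channel that differs from the one it arrived on.

```python
from dataclasses import dataclass, field

# Hypothetical list of actions that always require a second channel.
SENSITIVE_ACTIONS = {"wire_transfer", "credential_reset", "data_export"}

# Hypothetical directory of callback channels agreed on in advance.
# These must never be taken from the incoming request itself.
TRUSTED_CHANNELS = {
    "cfo@example.com": {"desk_phone", "in_person"},
}


@dataclass
class Request:
    requester: str   # who the caller claims to be
    action: str      # what is being asked for
    channel: str     # channel the request arrived on, e.g. "video_call"
    # Channels on which the request has been independently confirmed.
    confirmations: set = field(default_factory=set)


def may_proceed(req: Request) -> bool:
    """Return True only if the request satisfies multi-channel verification."""
    if req.action not in SENSITIVE_ACTIONS:
        return True
    trusted = TRUSTED_CHANNELS.get(req.requester, set())
    # A confirmation counts only if it came through a pre-established
    # channel AND that channel is not the one the request arrived on.
    independent = (req.confirmations & trusted) - {req.channel}
    return bool(independent)


if __name__ == "__main__":
    req = Request("cfo@example.com", "wire_transfer", "video_call")
    print(may_proceed(req))            # no confirmation yet
    req.confirmations.add("desk_phone")
    print(may_proceed(req))            # confirmed via a separate trusted channel
```

In this sketch, a convincing video call on its own never unlocks a wire transfer, and a requester with no pre-established channel on file cannot be verified at all. Both behaviors are design choices of the example; a real policy would define its own action list and escalation path.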

Develop a culture of empowerment, not blame

Technology alone can’t stop deepfakes—culture plays a critical role. Build a behavior-first security culture with:

- Non-punitive feedback loops: Treat missed deepfake detections as teachable moments, not failures.

- Empowerment over fear: Encourage employees to question, verify, and report suspicious content without fear of reprisal.

When employees are trained with realistic simulations, supported with smart verification protocols, and backed by an empowered culture, organizations can stay one step ahead of deepfake-enabled attacks.

Adaptive Security is built for the deepfake era

Deepfakes, once a digital media curiosity, are now powerful tools for fraud and executive impersonation. Detection technology alone can’t keep pace, and traditional awareness training falls short. Defending against these attacks requires realistic simulations, strong verification habits, and a security-focused culture that empowers employees.

Technology alone can’t solve the deepfake problem. That’s why Adaptive takes a behavior-first approach, combining cutting-edge simulations with real-world threat intelligence.

Request a demo and watch a hyper-realistic deepfake of your CEO.

FAQs about deepfakes

How do I know if a voice or video message is fake?

Many fake voice and video messages try to create a false sense of urgency. The attacker will request information or action immediately. There may also be unusual requests, speech, or actions from the impersonator.

Something might also just feel “off.” For example, you may see unnatural face movements or robotic-sounding speech. Use these signs to help identify false voice or video messages.

Can deepfakes bypass multi-factor authentication?

Yes, they can. Attackers use social engineering to gather information about the target, then create realistic deepfakes that can defeat MFA systems that rely on voice, face, or behavior recognition. This highlights the critical need for ongoing user education and awareness training.

Are there tools to detect deepfakes?

While there are no tools that detect deepfakes 100% accurately, there are some that can help, such as:

- Microsoft Video Authenticator: gives a confidence score on the likelihood of a deepfake

- Reality Defender: detects manipulated content in real time

- Intel’s FakeCatcher: analyzes subtle blood-flow signals in a video’s facial pixels, along with other physiological cues, to distinguish real people from AI video creations

These tools may help catch some obvious signs of a deepfake, but complete protection relies on informed employees. The human firewall is the best way to detect deepfakes.

What’s the best way to train against deepfakes?

Organizations can strengthen their defense against deepfakes by combining detection tools with ongoing employee education. Leading platforms include:

- Adaptive Security: uses AI-driven phishing simulations, including voice and video deepfake scenarios, to build real-world resilience across teams

- KnowBe4: a widely used security awareness platform offering phishing tests and training modules on emerging social engineering tactics

- Proofpoint Security Awareness Training: focuses on threat intelligence–driven education, helping users identify deepfake-enabled attacks

- Breacher.ai: provides deepfake and voice cloning detection tools to support security teams

As experts in cybersecurity insights and AI threat analysis, the Adaptive Security Team is sharing its expertise with organizations.
