Phishing has moved far beyond email. Today, attackers are exploiting the very platforms where companies build their brands and employees network. A fake recruiter on LinkedIn or a counterfeit brand offer on Instagram can look routine to your team—until one careless click exposes credentials and sensitive company data.

This is social media phishing. Social media platforms thrive on trust and urgency, and attackers exploit both with alarming precision. According to IBM’s Cost of a Data Breach Report 2025, phishing is responsible for 16% of all breaches at an average cost of $4.8 million per incident. It’s a cybersecurity risk that affects employees, executives, and brands alike.

In this guide, you’ll learn how phishing works on social platforms, what red flags to watch for, and what practical steps you can take—whether you’re leading a social team, responsible for security awareness, or protecting corporate accounts.

What is social media phishing?

Social media phishing is a type of phishing that uses fake accounts, deceptive messages, or malicious links on platforms like LinkedIn, Instagram, and X (formerly Twitter) to steal private information. This can include phone numbers, credit card numbers, bank account details, or other financial information.

Social media phishing works because these networks are built on trust—and attackers know exactly how to exploit that trust.

You might not notice it at first because the scams often mirror everyday interactions. For example:

- A message on LinkedIn from someone posing as a well-known industry analyst, sharing a “must-read” report that hides a credential-stealing link.

- An Instagram comment from a counterfeit brand account that encourages you to click a giveaway page.

- A direct message (DM) on X from a fake colleague account asking for help with an urgent “account reset.”

Each of these examples looks ordinary, even helpful, but in reality, they can be the opening move in a phishing scheme.

How social media phishing attacks work

Phishing on social media takes a more deceptive approach than the typical email scam. Instead of blasting out thousands of identical messages, attackers mimic the rhythm of real online interactions. They build familiarity and trust over time—then strike when your guard is down.

The process usually follows four steps:

- Step 1: Fake account creation. Attackers set up profiles that copy real people, brands, or job titles. They often steal profile photos, copy bios, and connect with mutual contacts to seem credible.

- Step 2: Building trust. Before reaching out, cybercriminals may like posts, leave supportive comments, or join relevant groups. This social “warm-up” helps them appear like a genuine connection.

- Step 3: Direct outreach. Once trust is established, the attacker sends a private message. It could be framed as a business opportunity, a request for urgent help, or a warning about account security.

- Step 4: Malicious action. The final move involves a link or file that steals login credentials, installs malware such as ransomware, or directs the target to a fake login page or malicious website.

What makes phishing on social media different is how personal it feels. An email from a random address is easy to dismiss, but a direct message from someone who seems to share your professional circle can be disarming.

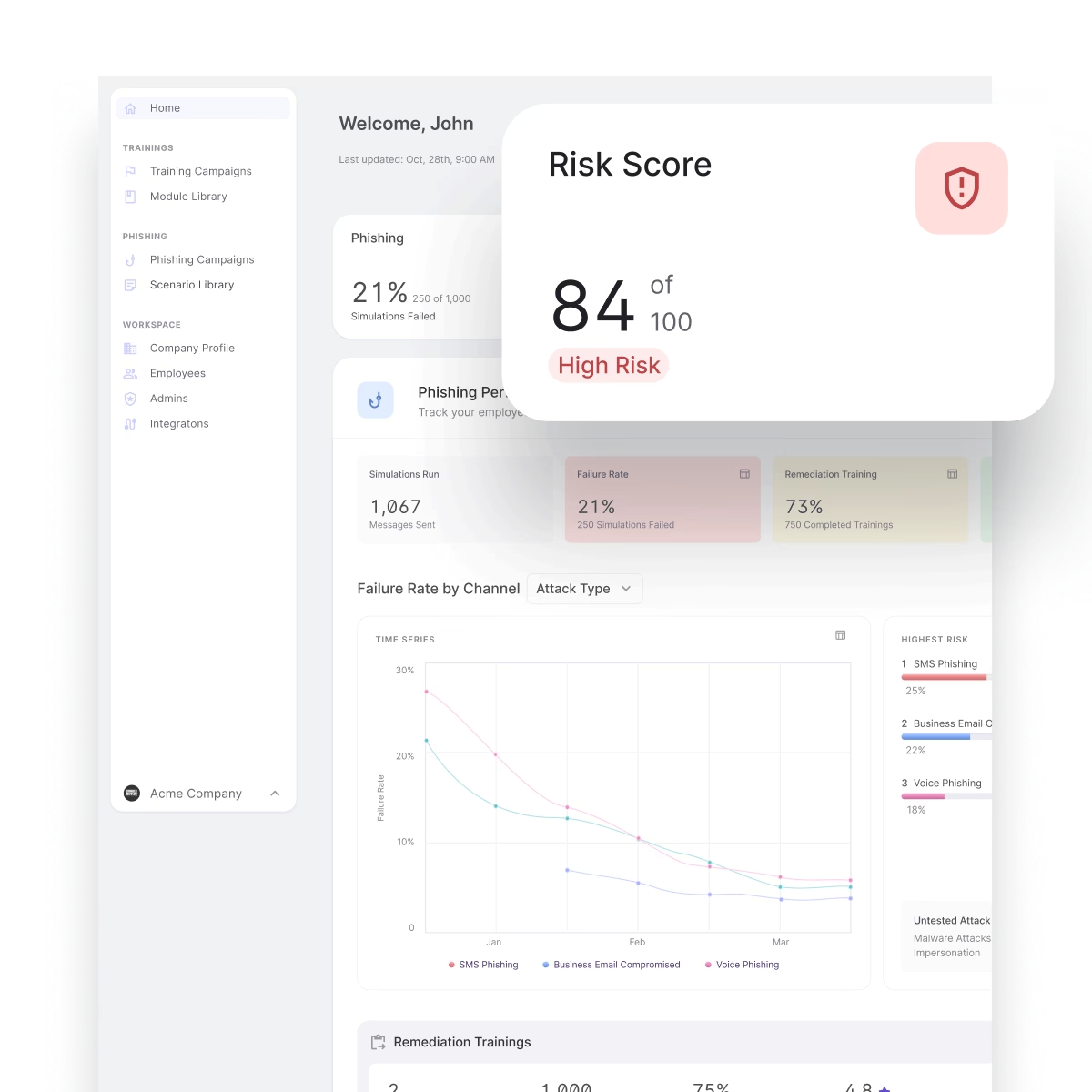

Modern awareness programs should not just train employees on email phishing, but simulate real-world social media scenarios as well. Adaptive Security uses AI phishing simulations to create LinkedIn-style messages or fake brand sponsorship offers, helping employees practice spotting risks in the environments they use every day.

A new attack surface: spear phishing through social media DMs

Direct messages (DMs) have opened a powerful new channel for spear phishing. Unlike broad phishing attempts, these cyberattacks are personalized. Phishers research their targets—looking at job titles, recent posts, or shared connections—and then craft messages that feel legitimate.

Because DMs arrive in the same inbox as genuine outreach, they’re harder to flag as suspicious. A LinkedIn message from someone posing as HR about an open role, a direct note from a fake executive asking for a quick favor, or a warning about an “account issue” on Instagram can all look routine at first glance. The personal tone makes victims more likely to act before verifying.

The rise of AI-generated impersonation makes these messages even more dangerous. According to Moonlock, AI impersonation scams surged by 148% in 2025, with deepfake voices, faces, and written messages tricking victims into revealing credentials or even wiring funds.

That combination of personalization and platform trust is what makes spear phishing through DMs especially dangerous. These messages look like everyday communication rather than scams.

Examples of social media phishing on popular platforms

The most effective way to understand social media phishing is to see how it plays out across the platforms you use every day. Each network has its own norms, and attackers design their scams to blend seamlessly into those patterns.

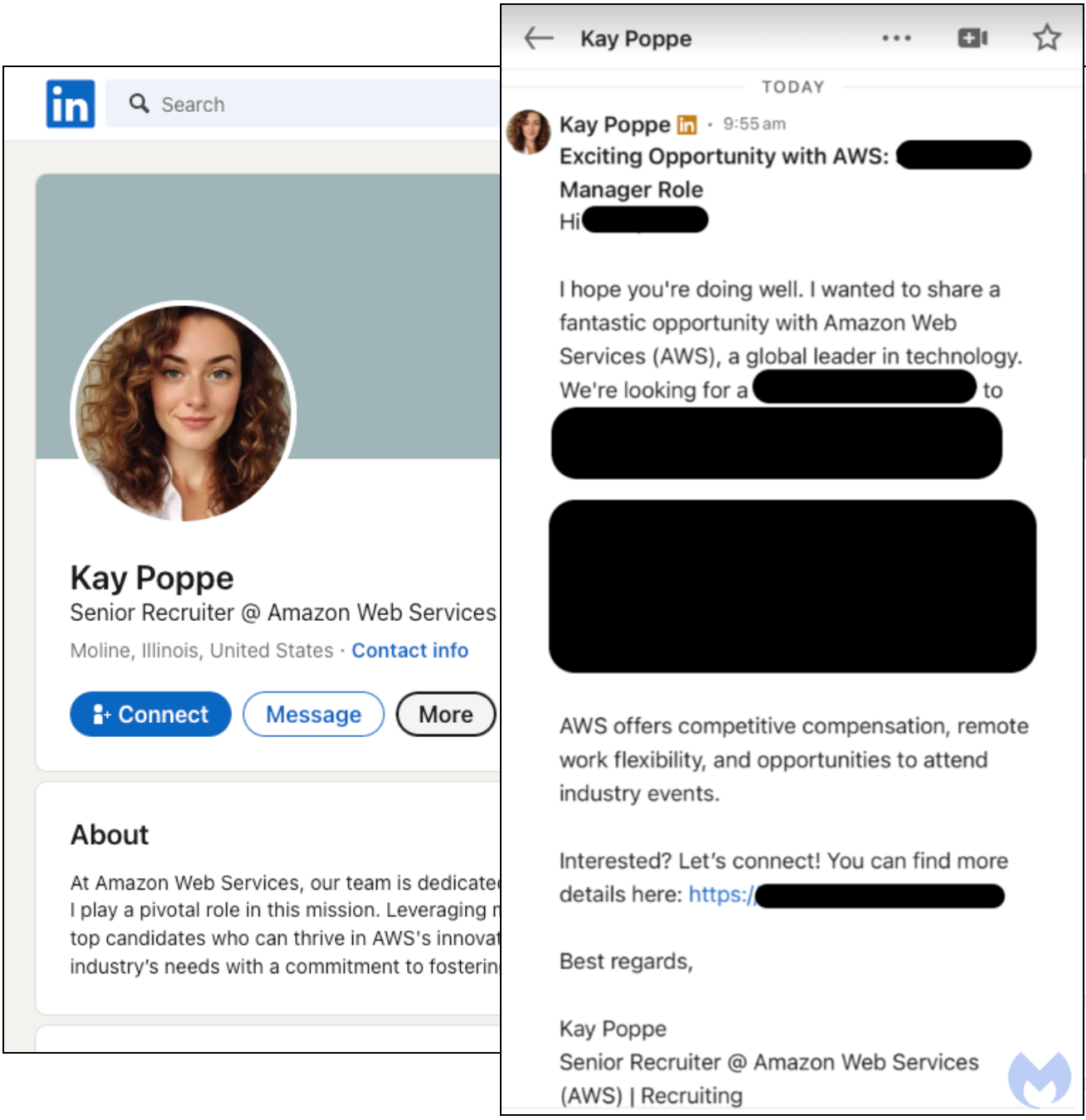

LinkedIn phishing

What makes LinkedIn especially attractive is the credibility people place in professional interactions. Employees expect to hear from recruiters, hiring managers, or executives, so fake job offers and impersonations can feel like routine interactions. That sense of trust, combined with the platform’s reach, creates the perfect environment for phishing to thrive.

In fact, in Q4 2024, LinkedIn accounted for 11% of all global brand phishing attacks, trailing only Microsoft (32%), Apple (12%), and Google (12%). That level of impersonation shows how consistently attackers exploit LinkedIn’s credibility.

In late 2024, researchers uncovered LinkedIn spear phishing campaigns in which attackers posed as recruiters from well-known companies and sent job offers tailored to each target’s profile. The messages contained shortened links that led to credential-harvesting pages designed to steal login details. At first glance, the profiles and messages looked authentic, making it difficult for employees to tell real opportunities from fake ones.

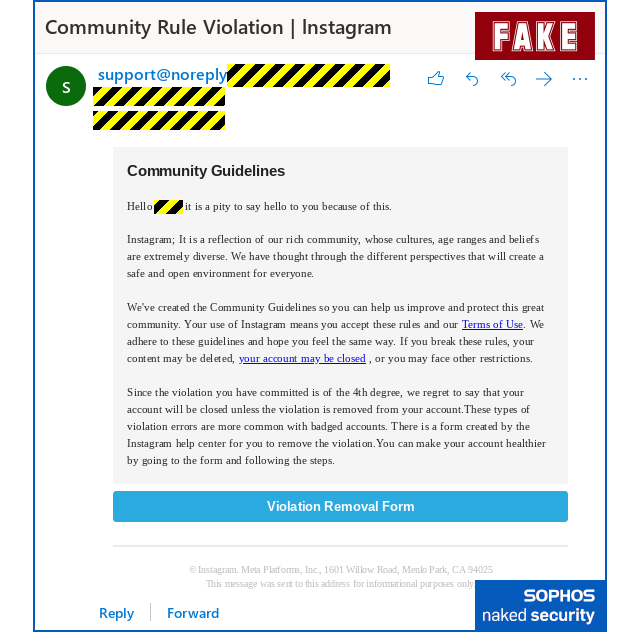

Instagram phishing

With more than 2 billion monthly active users, Instagram provides attackers with an enormous audience. The stakes are especially high for creators and businesses who depend on their accounts for income. When attackers gain access, they often use stolen credentials for identity theft, which can impact both the account owner and their followers.

A common tactic is the fake “Instagram Support” message, which claims a copyright violation or policy infraction. Victims are directed to pages that mimic Instagram’s login screen or to a fake support chat where they’re pressured into handing over credentials and authentication codes.

Increasingly, these scams are being scaled and polished through generative AI phishing, making them harder to distinguish from genuine support messages.

Influencers are also targeted with counterfeit brand sponsorship offers. These messages often include legitimate-looking logos and even formal contracts, but the links inside lead to credential theft or account takeovers. These accounts are then used to target the victim’s followers.

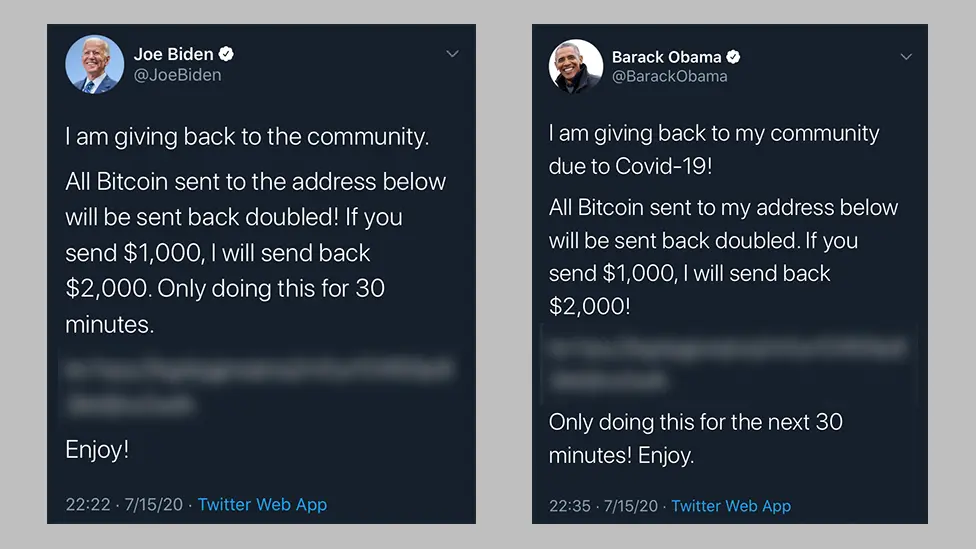

X (formerly Twitter) phishing

Despite controversy and user churn, X still attracts around 586 million monthly users. Its fast-moving conversations and trending topics create fertile ground for attackers, who can push phishing links before moderators or fact-checkers have time to respond.

Scammers use fake support accounts to send direct messages about “suspicious activity,” luring users to phishing pages that harvest login credentials. The risks extend beyond end users: in 2020, attackers phished Twitter employees, gained access to internal tools, and hijacked dozens of high-profile accounts, including those of Elon Musk and Barack Obama, to run crypto scams.

Deepfake cryptocurrency schemes are another rising threat. Attackers broadcast AI-generated videos of public figures during livestreams with QR codes promising instant rewards. Thousands of viewers are targeted in minutes, making these scams both highly visible and highly profitable.

Red flags: how to recognize social media phishing

Social media phishing works because it blends in. A fake recruiter looks like any other LinkedIn connection. A “support” DM on Instagram feels like standard platform communication. Spotting these attacks is about knowing what feels “off” and why, not memorizing a checklist of typos.

Here are some of the less obvious signs that deserve a second look:

- Profile signals: Attackers often spin up new or sparse profiles. Look for stock-style headshots or job titles and endorsements that feel out of place. Another common trick is cloning a real person’s details, but with a slight variation in spelling on the name or handle.

- Message cues: Urgent wording like “immediate action required” or “last chance” is common, but so is the opposite: messages that sound oddly generic or polished, almost AI-generated. Watch for shortened URLs, links hidden in images, or QR codes that bypass link previews—these are designed to mask where you’re really going.

- Context misfits: The strongest red flag is when the message doesn’t make sense in context. A junior employee shouldn’t be asked to process a wire transfer. An influencer who never posts about brand deals won’t suddenly get a luxury partnership offer out of the blue. If the request feels out of character, slow down.
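Some of the message cues above can be checked mechanically as a first pass. The following is a minimal Python sketch, not a production filter: the phrase list, the shortener domain list, and the function name `find_message_cues` are all illustrative assumptions, and a real deployment would need far broader coverage. It cannot judge context misfits, which still require a human.

```python
import re
from urllib.parse import urlparse

# Hypothetical, non-exhaustive lists chosen for illustration only.
URGENCY_PHRASES = ("immediate action required", "last chance", "urgent")
SHORTENER_DOMAINS = {"bit.ly", "tinyurl.com", "t.co", "goo.gl", "ow.ly"}

# Rough pattern for http(s) links embedded in message text.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')]+", re.IGNORECASE)


def find_message_cues(text: str) -> list[str]:
    """Return human-readable red flags found in a message."""
    cues = []
    lowered = text.lower()

    # Cue 1: pressure wording.
    for phrase in URGENCY_PHRASES:
        if phrase in lowered:
            cues.append(f"urgent wording: {phrase!r}")

    # Cue 2: links that hide their real destination behind a shortener.
    for url in URL_PATTERN.findall(text):
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host in SHORTENER_DOMAINS:
            cues.append(f"shortened URL: {host}")

    return cues


if __name__ == "__main__":
    sample = "Last chance! Verify your account at https://bit.ly/abc123 now"
    for cue in find_message_cues(sample):
        print(cue)
```

An empty result does not mean a message is safe. Polished, AI-generated lures and links hidden in images or QR codes would pass this check untouched, which is why the contextual judgment described above still matters.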

Being aware of these red flags is the first step. The second is building instincts to act on them under pressure. That’s where practice matters. Adaptive Security is built to mirror how phishing actually works today—not just in email or text messages, but across mobile and social channels.

Here’s how Adaptive Security helps train employees to recognize phishing attacks:

- It runs scenario-based phishing simulations that replicate real lures—like fake recruiter outreach or Instagram support DMs—so employees practice spotting them in a safe environment.

- Its scenario engine introduces more advanced tactics, such as impersonation attempts using open-source intelligence (OSINT) to make lures feel hyper-relevant.

- Behavioral reporting and retraining reveal which red flags employees recognized and which they missed. Adaptive also measures reductions in click-through rates, reporting speed, and retraining completion, so program owners get a clear view of progress and risk trends over time.

An IT user shared this experience with Adaptive Security: "The phishing simulations are next-level. They pull in real details from our company, so they feel incredibly convincing, and a bit scary, to be honest. It really makes people think twice before clicking. Also, the product team is quick to respond and genuinely listens to feedback, which is rare."

For social teams, employees, and executives, this kind of phishing training transforms red flags from a list into habits you can rely on. The goal isn’t paranoia—it’s pattern recognition. When that instinct kicks in, the difference between clicking and questioning becomes automatic.

Staying ahead of social media phishing threats

Social media phishing continues to evolve alongside the platforms themselves. Fake recruiters, counterfeit brand deals, and deepfake scams vary in form, but they all target the same thing: trust.

The strongest defense is awareness. When employees understand how these scams unfold, they slow down, question the context, and look twice before clicking. That pause can mean the difference between dismissing a nuisance and triggering a multimillion-dollar breach.

Security awareness training that reflects real-world social media threats gives teams instincts where they matter most. Adaptive Security provides that edge through realistic social phishing simulations. Employees learn to spot red flags in everyday communication and build resilience. Effective phishing training also reduces both the likelihood and cost of data breaches.

Ready to see how it works? Book a demo with Adaptive Security and discover how real-world phishing simulations can prepare your team for the threats they face every day.

Frequently asked questions about social media phishing

What’s the most common type of social media phishing?

The most common form is impersonation. Attackers create fake recruiter, executive, or brand accounts and message users with job offers, sponsorships, or urgent account issues. These scams look credible, making you more likely to click or share sensitive information without a second thought.

How do attackers use social media for phishing attacks?

Attackers exploit the trust built into platforms. They set up fake profiles, interact with posts to seem legitimate, and then send direct messages containing malicious links or requests. Because this happens inside familiar apps, victims are less skeptical than they might be with email.

What are the best social media phishing prevention and awareness strategies?

The most effective approach combines technical safeguards with ongoing awareness:

- Strengthen accounts with multi-factor authentication (MFA), such as two-factor authentication (2FA), along with link scanning tools and verified badges where available.

- Train employees using security awareness platforms like Adaptive Security, which simulate realistic phishing attempts across social and mobile channels.

- Support social teams by monitoring for impersonations, setting clear escalation workflows, and reporting fraudulent accounts quickly.

Together, these steps build habits and resilience against evolving social media threats.
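For teams that monitor for impersonations, one simple starting point is flagging handles that closely resemble an official one. The sketch below is a rough illustration using Python’s standard `difflib` module. The character-swap map, the 0.85 threshold, the handle `acme_corp`, and the function names are all assumptions made for the example, not values taken from any platform or vendor.

```python
from difflib import SequenceMatcher

# Hypothetical map of character swaps and separators often seen in lookalike handles.
LOOKALIKE_CHARS = str.maketrans(
    {"0": "o", "1": "l", "3": "e", "5": "s", "_": "", ".": "", "-": ""}
)


def normalize(handle: str) -> str:
    """Lowercase, drop a leading @, and undo common character swaps."""
    return handle.lower().lstrip("@").translate(LOOKALIKE_CHARS)


def is_lookalike(candidate: str, official: str, threshold: float = 0.85) -> bool:
    """True if candidate resembles, but is not identical to, the official handle."""
    if candidate.lower().lstrip("@") == official.lower().lstrip("@"):
        return False  # this is the genuine handle itself
    a, b = normalize(candidate), normalize(official)
    # Equal after normalization, or nearly equal by similarity ratio.
    return a == b or SequenceMatcher(None, a, b).ratio() >= threshold


if __name__ == "__main__":
    for handle in ("@acme_c0rp", "acme_corpp", "@Acme_Corp", "totally_different"):
        print(handle, is_lookalike(handle, "acme_corp"))
```

A check like this only narrows the list for human review. Handles that append words such as “support” fall below the similarity threshold chosen here, so a real workflow would pair it with keyword matching and the escalation steps described above.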

What are the top tools for social media phishing prevention and awareness?

Several platforms help organizations defend against phishing on social media:

- Adaptive Security: Realistic phishing simulations across email, SMS, and social platforms

- Proofpoint: Enterprise-grade threat protection with advanced filtering

- Cofense: Tools for phishing reporting, analysis, and response

- ZeroFox: Digital risk protection, including brand and executive impersonation detection

- KnowBe4: Security awareness training supported by simulated phishing campaigns

As experts in cybersecurity insights and AI threat analysis, the Adaptive Security Team is sharing its expertise with organizations.
