Impersonation attacks exploit human trust, targeting senior leaders as well as the finance and legal teams that carry out critical transactions. These scams grew by 148% in 2025, driven by AI tools that can convincingly mimic an executive’s voice or likeness.

Global engineering firm Arup suffered such an attack, in which attackers staged a video call with participants who appeared to be multiple company executives. The convincing deepfake led to a fraudulent wire transfer of nearly $25 million. The lesson isn’t just the scale of the loss, but how effectively social cues like authority and urgency can override normal controls.

Fortunately, these attacks are preventable. With the right preparation and security awareness training, teams can learn to slow down, question suspicious requests, and use verification processes to prevent even the most sophisticated impersonation from succeeding.

What is an impersonation attack?

An impersonation attack involves a threat actor posing as a trusted individual to manipulate someone into taking harmful action. Unlike traditional exploits that target systems or software, these attacks focus on perception, authority, and trust.

While phishing often casts a wide net with generic messages, impersonation is highly targeted. Attackers may use AI-generated spear phishing to mimic the tone, style, or even voice of an executive. This level of personalization makes the request seem authentic and feel urgent, raising the likelihood that employees will bypass normal checks.

Common attack vectors include email, phone calls, SMS, and LinkedIn. The primary targets are C-suite leaders, finance teams, HR departments, and legal counsel—all of whom have access to sensitive data or financial decision-making power.

By exploiting trusted roles and relationships, impersonation attacks often slip past technical defenses, leaving the employee’s response as the final line of defense.

5 types of impersonation attacks (with real-world examples)

Impersonation attacks exploit trust, not firewalls. They thrive when employees assume that authority, urgency, or familiarity signals legitimacy. Below are common impersonation attacks and how they’re weaponized with subtle cues against executive, finance, HR, and legal teams.

1. Email impersonation

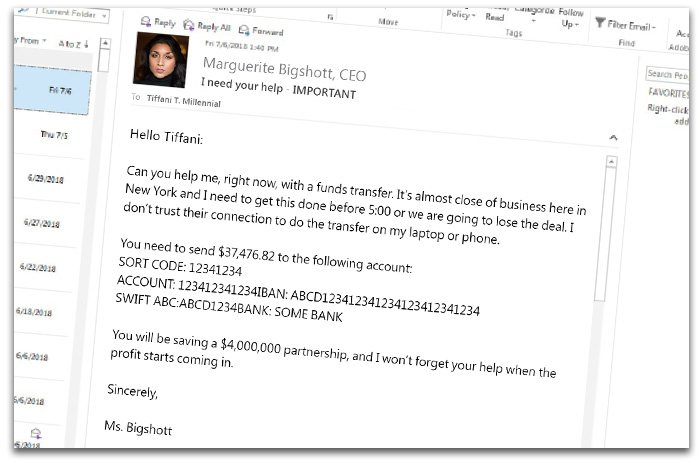

This is the classic CEO fraud email. Attackers impersonate senior leaders, directing staff to transfer funds or share sensitive data. These have evolved into AI executive email impersonation attacks, where natural language models craft convincing tone, style, and urgency to mimic a leader’s actual writing.

Real-world example: Between 2013 and 2015, a Lithuanian hacker impersonated a hardware supplier and tricked both Google and Facebook into paying more than $100 million. The attacker forged invoices, contracts, and corporate stamps to make the emails look authentic, leading finance teams at both companies to approve the transfers.

Adaptive Security’s training simulations expose employees to highly personalized messages like the one shown above so they can practice spotting red flags before real attackers strike.

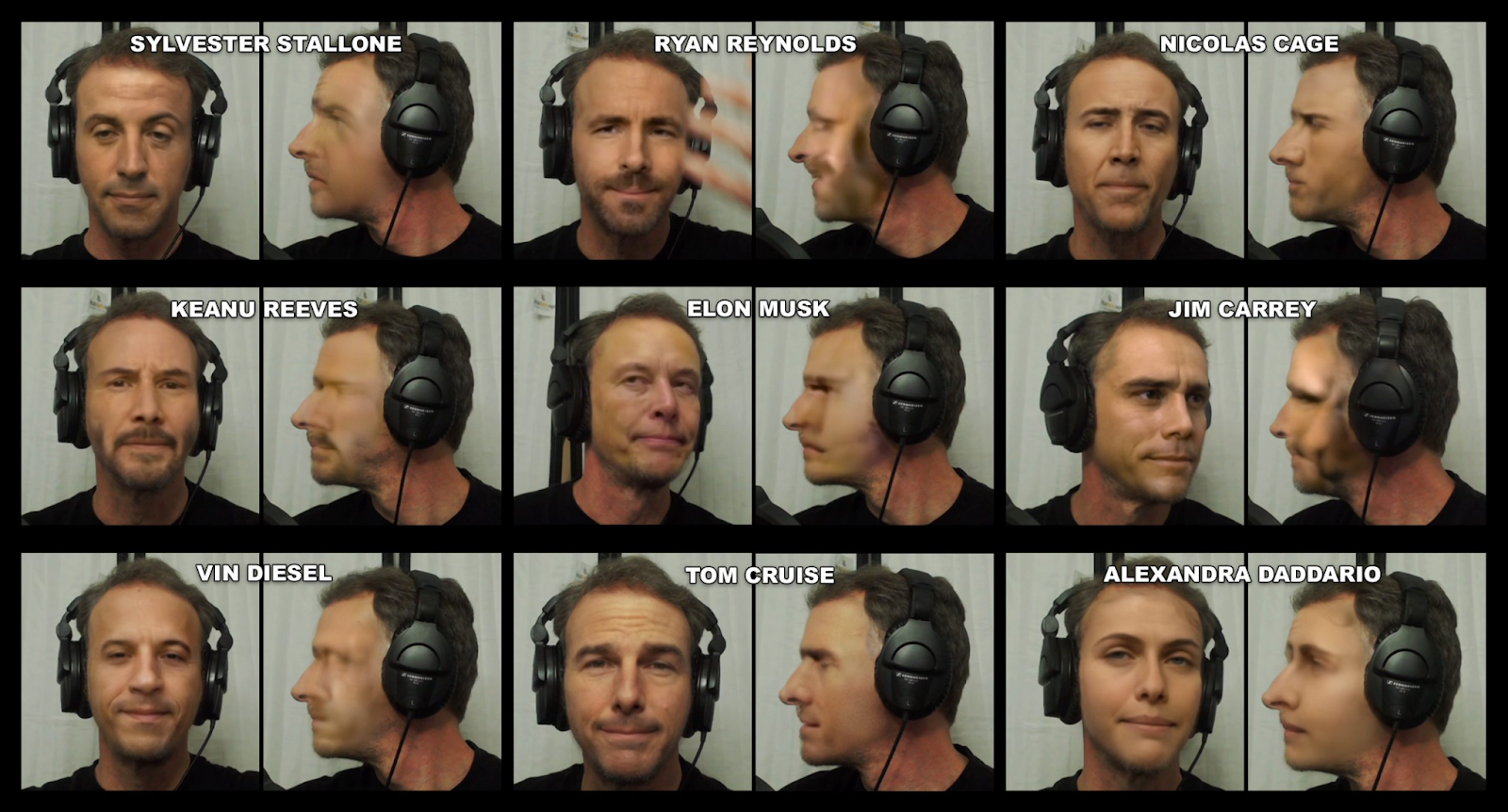

2. Deepfake voice and video impersonation

The rise of AI has opened the door to AI voice cloning scams and vishing (voice phishing), where attackers create convincing audio or video replicating an executive. These deepfake attacks often carry more authority than an email because they simulate live communication.

Real-world example: In 2024, hackers impersonated WPP’s CEO, Mark Read, and another senior executive in an elaborate deepfake scam, using a cloned voice and a staged Microsoft Teams meeting complete with visuals. Fortunately, employees detected inconsistencies and halted the fraud before any loss occurred.

Adaptive’s deepfake video call security guide helps organizations build awareness of this growing threat, equipping teams to pause, verify, and escalate suspicious requests.

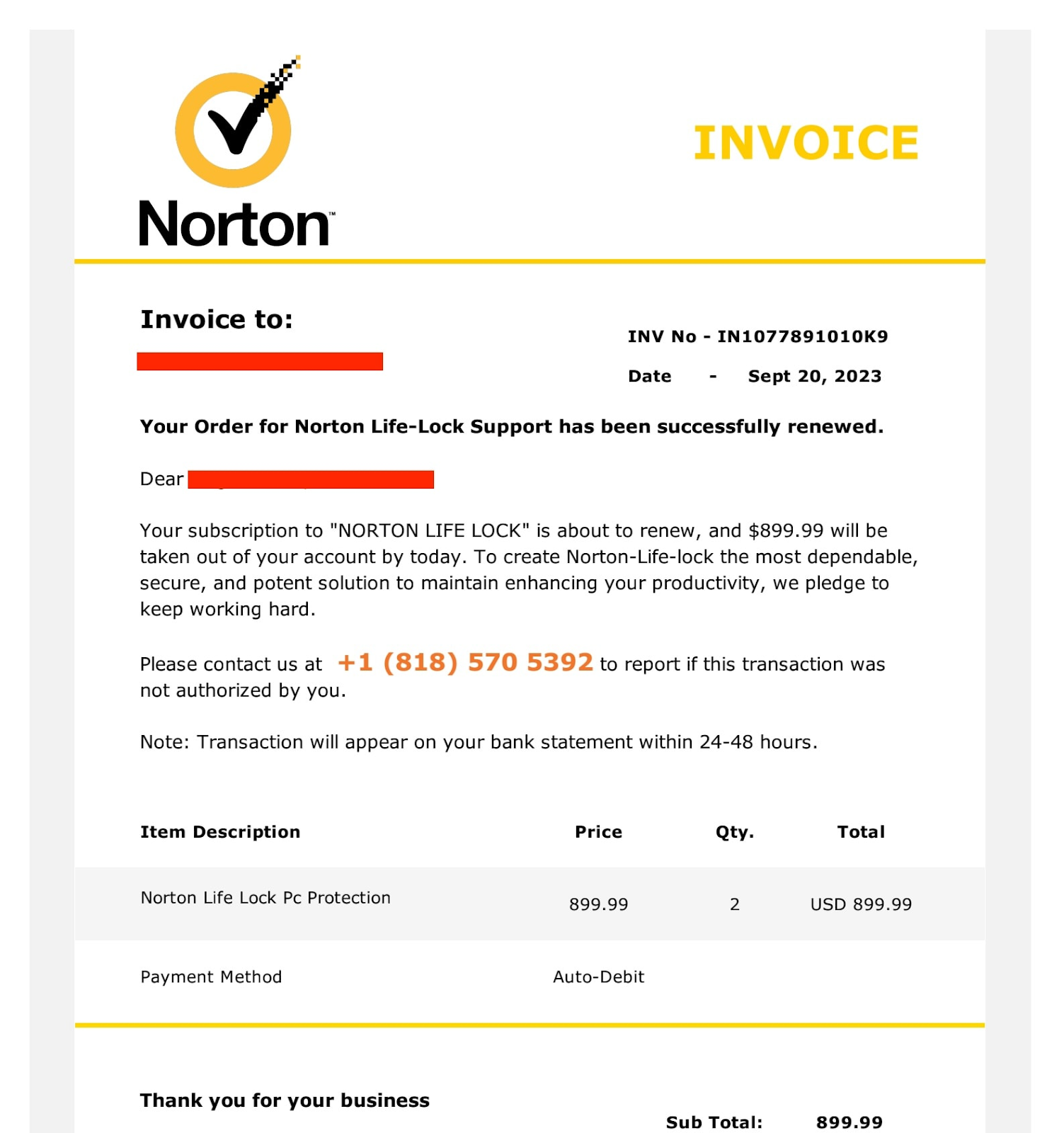

3. Vendor or partner spoofing

Attackers often impersonate external partners or suppliers with fake invoices or requests that appear routine. These scams are effective because employees want to maintain strong vendor relationships and may bypass checks when a vendor payment looks familiar.

Adaptive’s security training teaches finance and procurement teams to confirm the legitimacy of invoices through multi-channel verification before funds move, reducing the chance of fraudulent disbursements.

Real-world example: In 2019, attackers defrauded a European subsidiary of Toyota Boshoku, a Toyota Group parts supplier, of $37 million after spoofing one of its business partners and sending a convincing payment request. Finance staff processed the request without realizing the criminals had swapped the bank account with one they controlled.
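One way to picture the control that catches a swapped bank account is a simple comparison between the account on an incoming payment request and the account already on file for that vendor. The sketch below is illustrative only: `VendorRecord`, `normalize_account`, and `review_payment_request` are hypothetical names, and it assumes the organization keeps a vendor master record that was verified at onboarding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorRecord:
    """Hypothetical vendor master entry, verified when the vendor was onboarded."""
    name: str
    bank_account: str
    callback_phone: str


def normalize_account(account: str) -> str:
    """Drop spaces and punctuation and uppercase the rest, so formatting
    differences alone are not treated as a change of account."""
    return "".join(ch for ch in account if ch.isalnum()).upper()


def review_payment_request(vendor: VendorRecord, requested_account: str) -> str:
    """Return 'proceed' when the requested account matches the record on file.

    Any mismatch returns 'hold', meaning the payment waits until someone
    confirms the change by calling vendor.callback_phone (the number on file,
    never a number supplied in the request itself).
    """
    if normalize_account(requested_account) == normalize_account(vendor.bank_account):
        return "proceed"
    return "hold"


vendor = VendorRecord("Acme Supplies", "DE89 3704 0044 0532 0130 00", "+49 30 1234567")
print(review_payment_request(vendor, "de89370400440532013000"))
print(review_payment_request(vendor, "GB29NWBK60161331926819"))
```

The point of the design is that a changed account never blocks a payment forever. It only forces the second-channel check that a spoofed request is built to avoid.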

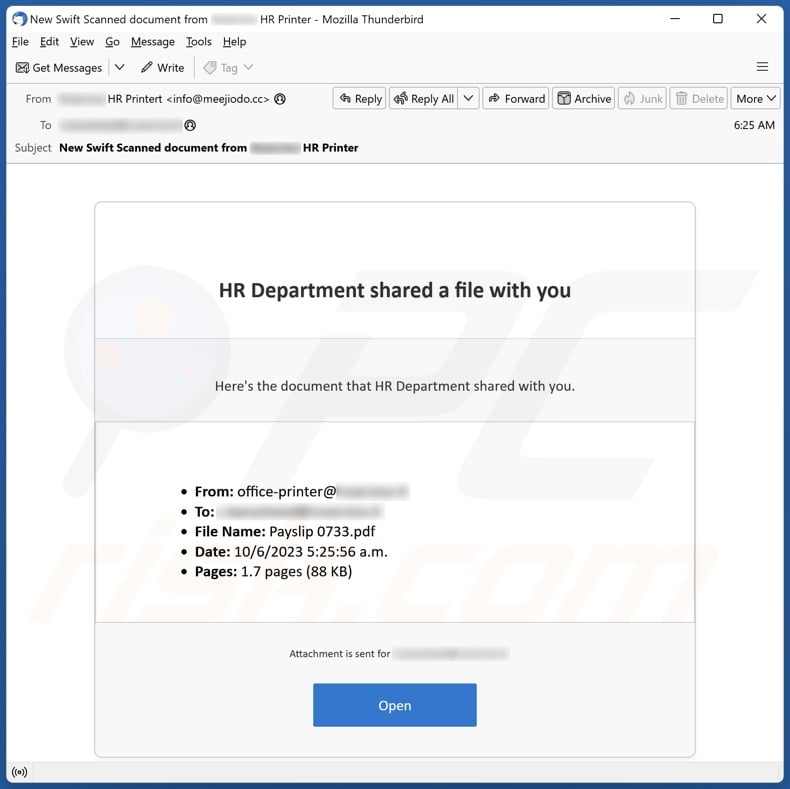

4. Internal or employee spoofing

Attackers can also pose as colleagues within the organization, targeting HR or payroll. These attacks are less about financial transfers and more about manipulating employee data. A common scheme involves a form of email spoofing, requesting updates to direct deposit information via a disguised HR email account.

Sample scenario: An HR staff member receives an urgent email that looks like it comes from a current employee. The request is simple: “I’ve just switched banks, can you update my direct deposit before Friday’s payroll run?” If they make the update without confirming through another channel, the employee’s salary is diverted directly into the attacker’s account.

Recognizing subtle anomalies in tone, formatting, and sender identity can reinforce controls that prevent data manipulation.
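Sender-identity anomalies are the easiest of these cues to check mechanically. As a rough sketch, the snippet below uses Python’s standard `email.utils.parseaddr` to flag a sender whose domain is not a company domain, or whose Reply-To points somewhere else. `TRUSTED_DOMAINS` and `sender_red_flags` are hypothetical names, and `example.com` is a placeholder; a check like this supports human review rather than replacing it.

```python
from email.utils import parseaddr

# Assumption: the set of domains the organization actually sends mail from.
TRUSTED_DOMAINS = {"example.com"}


def domain_of(header_value: str) -> str:
    """Extract the lowercased domain from a From or Reply-To header value."""
    _display_name, address = parseaddr(header_value)
    return address.rpartition("@")[2].lower()


def sender_red_flags(from_header: str, reply_to: str = "") -> list[str]:
    """Return human-readable warnings about the sender's identity."""
    flags = []
    sender_domain = domain_of(from_header)
    if sender_domain not in TRUSTED_DOMAINS:
        flags.append("sender domain is not a company domain")
    if reply_to and domain_of(reply_to) != sender_domain:
        flags.append("reply-to domain differs from sender domain")
    return flags


# A lookalike domain ("examp1e" with the digit 1) is flagged.
print(sender_red_flags("Jane Doe <jane.doe@examp1e.com>"))
# A legitimate-looking sender whose replies go elsewhere is also flagged.
print(sender_red_flags("Jane Doe <jane.doe@example.com>", "jane.doe@mailbox.test"))
```

An empty result does not prove a message is genuine, since a compromised internal account would pass both checks. A payroll change should still be confirmed with the employee through another channel.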

5. Legal or regulatory pretexting

Impersonators may claim to represent a government agency, regulator, or outside counsel. By leaning on urgency and authority, they pressure legal or compliance teams to release data or authorize actions quickly.

Sample scenario: A caller claims to be from the U.S. Department of Labor, demanding access to employee contracts for a “pending audit.” The caller uses the threat of penalties to push compliance staff into bypassing verification and handing over confidential records. These attacks succeed because few employees want to appear obstructive in the face of authority.

Why executives are prime targets for impersonation attacks

Executives command significant authority yet often face reduced scrutiny. Their decisions can unlock sensitive information, authorize large transfers, or bypass typical vetting. That influence, combined with heightened exposure, makes them especially vulnerable.

- High authority, low scrutiny: Executives’ directives carry weight, and under pressure or decision fatigue, employees may act without verifying.

- Expansive digital footprints: Public speaking, LinkedIn, and press coverage give attackers personal details that make impersonations highly convincing.

- Rising impersonation threats in 2025: A Ponemon Institute survey found 51% of security teams saw executive-targeted attacks this year.

- Gatekeeper bypassing: Executives often operate outside normal controls, making it easier for attackers to slip past filters or screeners.

Why this matters for executives

Because impersonation attacks target perception and authority rather than systems, the most effective defenses are human-centered. Training that raises awareness of digital footprints, reinforces skepticism under pressure, and builds fatigue-resistant workflows is essential.

This focus on people and process is what defines the future of enterprise social engineering defense—a shift from relying solely on technical controls to embedding resilience into daily decision-making.

How to spot an impersonation attack before it’s too late

Even the most convincing impersonation attacks leave behind subtle traces. Security awareness training platforms help employees sharpen their ability to notice cues like urgency and language inconsistencies and resist manipulation under pressure. Below are key signs and scenarios that can prevent a costly misstep:

- Unusual urgency: Messages that push immediate action, such as “transfer today,” “reply within the hour,” or “confidential and urgent,” override critical thinking. Cybercriminals exploit the human bias toward authority combined with urgency, knowing that employees are less likely to verify details when they feel rushed.

- Authority without context: An unexpected order from an executive, regulator, or vendor may bypass normal checks because of its authority. Verifying that it aligns with established processes can reveal inconsistencies that attackers hope go unnoticed.

- Subtle language mismatches: AI-generated spear phishing often misses nuance. Phrases may feel slightly off, overly formal, or inconsistent with a leader’s usual style. Training helps teams recognize patterns, turning these small discrepancies into red flags.

- Requests that skip normal channels: Attackers often try to bypass gatekeepers. A wire transfer instruction delivered directly to a junior staffer or a payroll change request sent outside the HR portal should trigger extra scrutiny.

- Deepfake voice and video cues: AI voice cloning scams and synthetic video (deepfakes) are harder to spot than written red flags. Security awareness training must include exercises that expose employees to deepfake scenarios so they learn to slow down and verify through secondary channels.

The best risk management practices include requiring callbacks to known phone numbers, implementing multi-party approvals for sensitive actions, and maintaining clear escalation paths for suspicious requests.
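These three practices can be read as a small decision procedure: escalate anything flagged as suspicious, require a callback before anything else, then require enough independent approvals. The sketch below is one possible encoding under stated assumptions, not a prescribed workflow. `SensitiveRequest`, `next_step`, and the two-approver threshold are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field

# Assumption: two approvers other than the requester are needed for sensitive actions.
REQUIRED_APPROVERS = 2


@dataclass
class SensitiveRequest:
    """Hypothetical record of a wire transfer, payroll change, or data release."""
    requester: str
    description: str
    flagged_suspicious: bool = False
    callback_verified: bool = False
    approvers: set[str] = field(default_factory=set)


def next_step(request: SensitiveRequest) -> str:
    """Return the next action the process allows for this request."""
    if request.flagged_suspicious:
        return "escalate to security"
    if not request.callback_verified:
        return "call back on a known number"
    # The requester cannot count as one of their own approvers.
    independent = request.approvers - {request.requester}
    if len(independent) < REQUIRED_APPROVERS:
        return "collect another approval"
    return "execute"


req = SensitiveRequest(requester="cfo", description="wire transfer")
print(next_step(req))
req.callback_verified = True
req.approvers.update({"cfo", "controller"})
print(next_step(req))
req.approvers.add("treasurer")
print(next_step(req))
```

Ordering the checks this way means that urgency or seniority never shortcuts the callback, which is the step a deepfake call or spoofed email cannot satisfy on its own.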

Real-world near miss: In March 2025, a finance director at a multinational firm in Singapore nearly approved a $499,000 transfer during what looked like a legitimate Zoom call with the company’s CFO and other senior executives, all of whom turned out to be deepfakes.

The gravity of the situation hit home when the finance team verified identities afterward and realized the virtual meeting was entirely fabricated.

Together, these cues highlight that impersonation detection depends as much on human awareness as it does on technology, which is why building resilience through training is critical.

How Adaptive Security helps combat impersonation attacks

Impersonation attacks exploit trust, authority, and urgency, factors that technical defenses alone can’t catch. As they become more targeted and AI-driven, organizations need a behavior-first approach that helps employees recognize these scams in real time.

Adaptive Security delivers exactly that. With deepfake simulations, executive impersonation drills, and tailored training for high-risk departments such as finance, HR, and legal, Adaptive prepares teams to spot the subtle cues that distinguish a real request from a fraudulent one.

Unlike generic phishing training, Adaptive focuses on the human side of social engineering, equipping employees to resist even the most convincing AI-powered attacks.

Take a self-guided tour or request a demo of Adaptive’s security awareness training platform and test your defenses against the next wave of impersonation threats.

FAQs about impersonation attacks

How do impersonation attacks differ from phishing attacks?

Phishing emails and campaigns often cast a wide net with generic messages designed to trick large groups of people. Impersonation attacks, on the other hand, are highly targeted.

They exploit trust by mimicking executives, colleagues, or partners with personalized detail, often using AI to replicate writing style, voice, or appearance. This makes them harder to detect and dangerous for organizations handling sensitive data or high-value transactions.

Are impersonation attacks illegal?

Yes. Impersonation attacks fall under fraud, identity theft, and cybercrime laws in most jurisdictions. Offenders can face criminal charges, civil penalties, and restitution orders if caught. Because these crimes often cross borders, they are an enforcement priority for agencies such as the FBI, Europol, and Interpol.

What actions can help mitigate the risk of impersonation attacks?

There are a few security measures organizations can take to minimize the risk of falling victim to an impersonation attack, including:

- Investing in security awareness training platforms like Adaptive to teach employees how to spot subtle cues

- Enforcing multi-factor authentication (MFA) to strengthen email account security and requiring multi-party approvals for sensitive requests

- Verifying unusual instructions through secondary channels (e.g., phone call to a known number)

- Limiting public disclosure of executive details that malicious actors can weaponize

- Implementing anti-malware software on all company-issued devices to reduce vulnerabilities

What are the most common tactics used in impersonation attacks?

Attackers typically lean on psychological levers rather than technical exploits. These tactics rely on authority, urgency, and familiarity to push victims into acting quickly without verification.

Common tactics include urgent or unusual requests for financial transfers, impersonating executives via email or phone, spoofed vendor invoices, and payroll redirection scams. Increasingly, attackers are using deepfake audio or video designed to simulate live communication.

As experts in cybersecurity insights and AI threat analysis, the Adaptive Security Team is sharing its expertise with organizations.
